The AI space is absolutely roaring about Anthropic's latest release, Claude Opus 4.6, today. If you know Claude, then you know the hype is real!

This upgrade brings meaningful improvements across agentic workflows and reasoning tasks while showing some unexpected trade-offs in certain benchmarks. What makes these powerful upgrades even more exciting is that Opus 4.6 is the first Opus-class model with a 1M token context window. Together, these gains translate into agents that can operate over far larger problems without losing context.

The model is available now through Anthropic's API, major cloud providers, and best of all on Vellum!

💡 Want to see how Claude Opus 4.6 compares to the other leading models for your use case? Compare them in Vellum!

Key observations from benchmarks

While benchmarks are inherently limited and may not fully capture real-world utility, they are our only quantifiable way to measure progress. From the reported data, we can conclude a few things:

- Agentic capabilities shine: The standout results are in agentic tasks. 65.4% on Terminal-Bench 2.0, 72.7% on OSWorld (computer use), 91.9% on τ2-bench Retail, and a massive 84.0% on BrowseComp search. These represent significant leaps over Opus 4.5 and competing models in practical agent workflows.

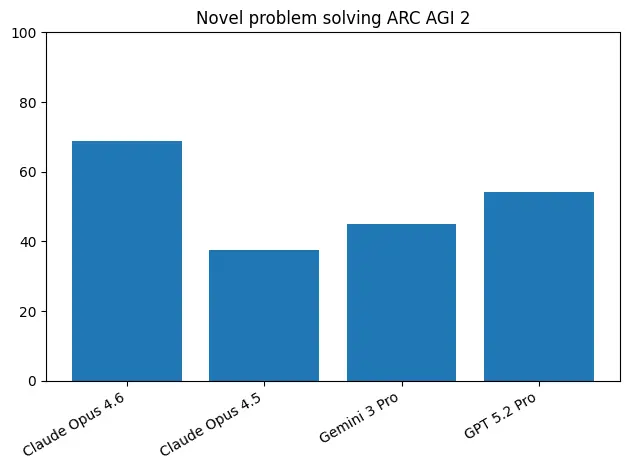

- Novel problem-solving dominance: The 68.8% score on ARC AGI 2 nearly doubles Opus 4.5's 37.6% and crushes Gemini 3 Pro's 45.1%, indicating a major step forward in abstract reasoning capability.

- Multidisciplinary reasoning leads: 40.0% on Humanity's Last Exam without tools beats Opus 4.5's 30.8% and Gemini 3 Pro's 37.5%, and the 53.1% with tools edges out GPT-5.2 Pro's 50.0%.

- Coding trade-off: Interestingly, Opus 4.6 scores 80.8% on SWE-bench Verified, a slight dip from Opus 4.5's 80.9%, suggesting optimization focused elsewhere.

- Visual reasoning improvements: 73.9% without tools and 77.3% with tools on MMMU Pro show steady progress, though Opus 4.6 still trails GPT-5.2's 79.5%/80.4%.

Coding and Software Engineering

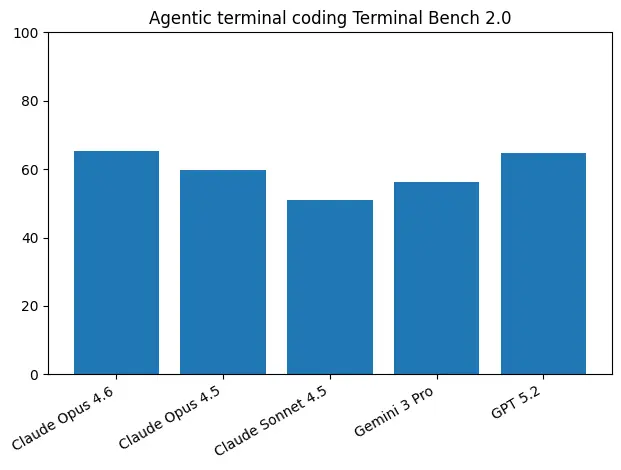

Agentic terminal coding (Terminal-Bench 2.0)

Terminal-Bench evaluates a model's ability to navigate command-line environments, execute shell commands, and perform development operations.

Claude Opus 4.6 scores 65.4% on Terminal-Bench 2.0, a substantial improvement over Opus 4.5's 59.8% and ahead of Sonnet 4.5's 51.0% and Gemini 3 Pro's 56.2%. It also narrowly edges out GPT-5.2's 64.7% (self-reported via Codex CLI). This represents the strongest command-line performance in Anthropic's lineup.

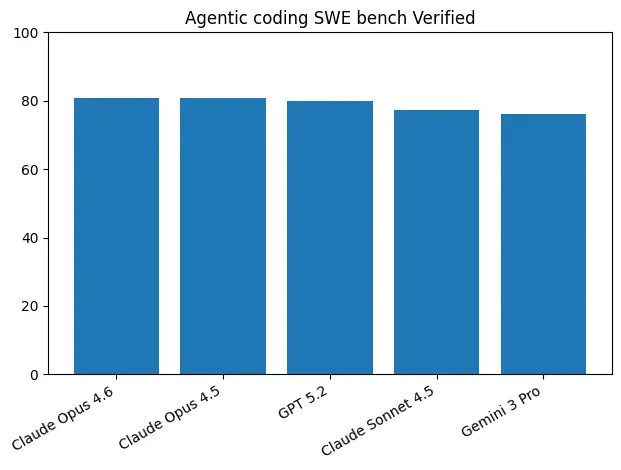

Agentic coding (SWE-bench Verified)

SWE-bench Verified tests real-world software engineering by evaluating models on their ability to resolve actual GitHub issues across production codebases.

Opus 4.6 achieves 80.8% on SWE-bench Verified, essentially matching Opus 4.5's 80.9% and GPT-5.2's 80.0%, while outperforming Sonnet 4.5 77.2% and Gemini 3 Pro 76.2%. This near-parity with its predecessor suggests Anthropic prioritized other capabilities in this iteration while maintaining elite coding performance.

Agentic Tool Use and Orchestration

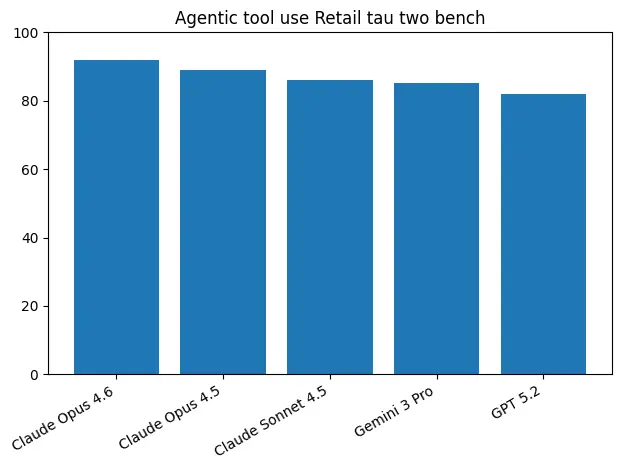

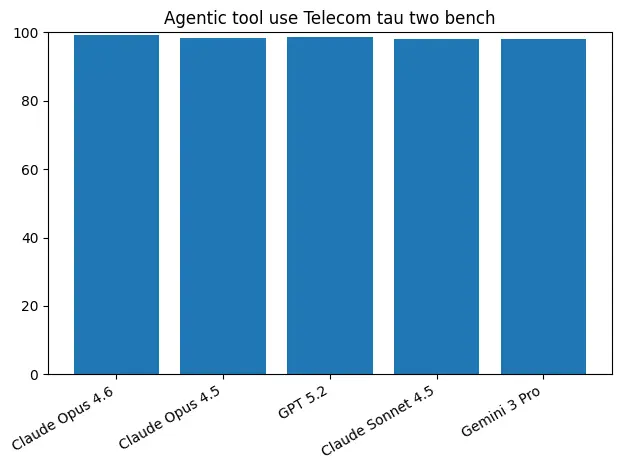

Agentic tool use (τ2-bench)

The τ2-bench evaluates sophisticated tool-calling capabilities across two domains: Retail (consumer scenarios) and Telecom (enterprise support). This benchmark tests multi-step planning and accurate function invocation.

Opus 4.6 achieves remarkable scores: 91.9% on Retail and 99.3% on Telecom. The Retail score surpasses Opus 4.5 88.9%, Sonnet 4.5 86.2%, Gemini 3 Pro 85.3%, and GPT-5.2 82.0%.

On Telecom, it edges out Opus 4.5's 98.2% and GPT-5.2's 98.7%, and tops Sonnet 4.5 and Gemini 3 Pro, both at 98.0%. These results position Opus 4.6 as the strongest model in this comparison for complex tool orchestration across these domains.

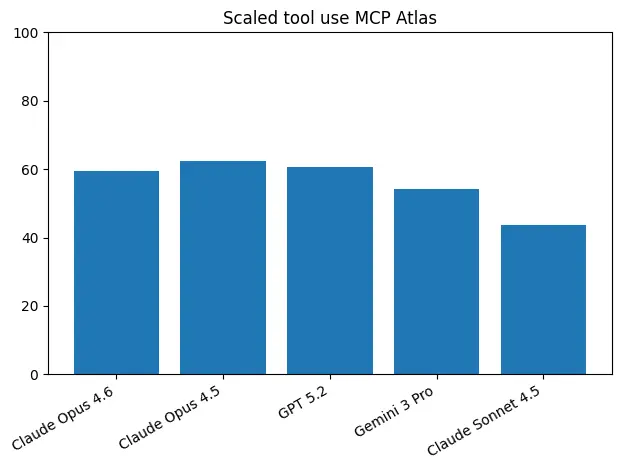

Scaled tool use (MCP Atlas)

MCP Atlas tests a model's ability to handle tool use at scale, evaluating performance when coordinating many tools simultaneously. This makes it especially relevant when choosing a model for an agent with access to many tools.

Opus 4.6 scores 59.5% on MCP Atlas, falling behind Opus 4.5's 62.3% and GPT-5.2's 60.6%, but ahead of Sonnet 4.5 43.8% and Gemini 3 Pro 54.1%. This dip from the previous version is one of the few areas where Opus 4.6 regresses, suggesting potential trade-offs in how the model handles highly scaled tool coordination.

Computer and Environment Interaction

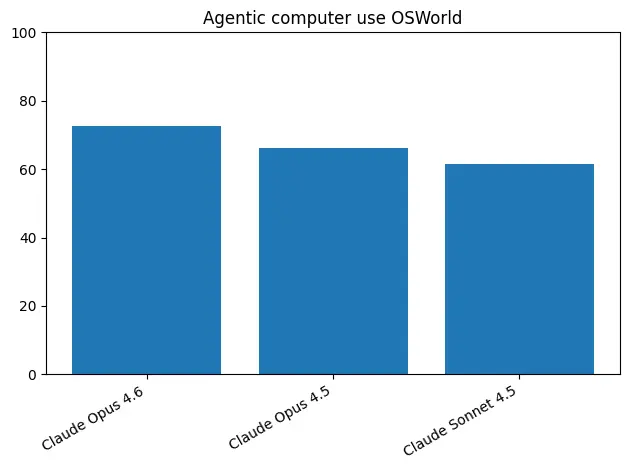

Agentic computer use (OSWorld)

OSWorld evaluates a model's ability to control computers through GUI interactions, simulating real desktop automation tasks.

Claude Opus 4.6 delivers 72.7% on OSWorld, a significant jump from Opus 4.5's 66.3% and well ahead of Sonnet 4.5's 61.4%. This benchmark wasn't reported for Gemini 3 Pro or GPT-5.2, making direct comparison impossible, but the 6.4 percentage point improvement over its predecessor is notable for practical automation workflows.

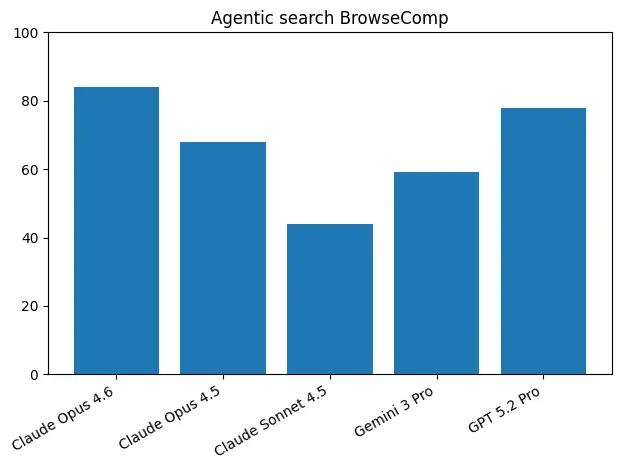

Agentic search (BrowseComp)

BrowseComp evaluates web browsing and search capabilities, testing a model's ability to navigate websites, extract information, and complete multi-step research tasks.

Opus 4.6 dominates with 84.0% on BrowseComp, a massive leap from Opus 4.5's 67.8% and crushing Sonnet 4.5's 43.9%. It also beats Gemini 3 Pro 59.2% (Deep Research) and GPT-5.2 Pro 77.9%. This 16.2 percentage point improvement over its predecessor makes Opus 4.6 the clear leader for agentic web research and information gathering.

Reasoning and General Intelligence

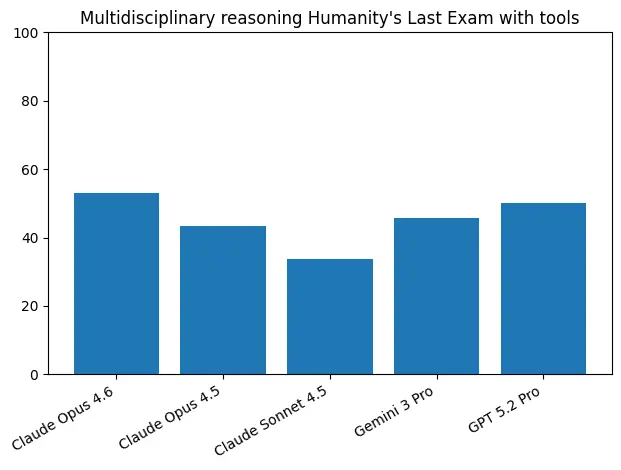

Multidisciplinary reasoning (Humanity's Last Exam)

Humanity's Last Exam tests frontier reasoning across diverse academic disciplines, designed to challenge even the most capable models with questions requiring deep understanding and synthesis.

Opus 4.6 scores 40.0% without tools and 53.1% with tools, improving significantly over Opus 4.5's 30.8%/43.4% and Sonnet 4.5's 17.7%/33.6%. It leads in both settings, ahead of Gemini 3 Pro's 37.5%/45.8% and GPT-5.2's 36.6%/50.0% (Pro). The 9.2 percentage point gain without tools suggests meaningful improvements in core reasoning capacity.

Novel problem-solving (ARC AGI 2)

ARC AGI 2 tests abstract reasoning and pattern recognition on novel problems, designed to measure general intelligence rather than learned knowledge—one of the most challenging benchmarks for current AI systems.

Opus 4.6 scores an impressive 68.8% on ARC AGI 2, nearly doubling Opus 4.5's 37.6% and significantly outperforming Gemini 3 Pro 45.1% (Deep Thinking) and GPT-5.2 Pro 54.2%. This 31.2 percentage point leap represents one of the most dramatic improvements in the release and suggests a fundamental advancement in abstract reasoning capability.

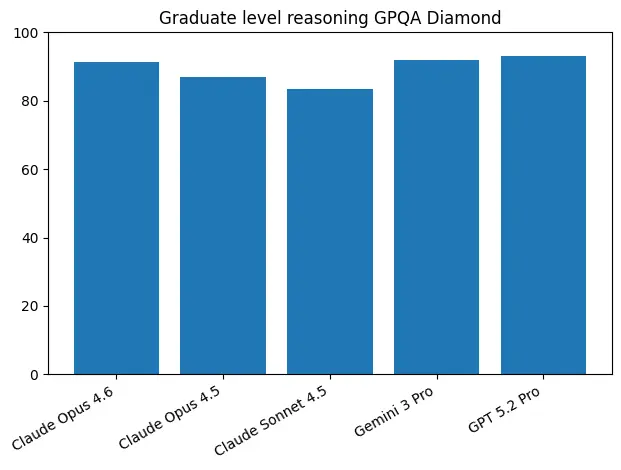

Graduate-level reasoning (GPQA Diamond)

GPQA Diamond evaluates expert-level scientific knowledge across physics, chemistry, and biology with PhD-level questions, testing both domain expertise and reasoning depth.

Opus 4.6 achieves 91.3% on GPQA Diamond, improving over Opus 4.5's 87.0% and Sonnet 4.5's 83.4%, while nearly matching Gemini 3 Pro's 91.9% and trailing GPT-5.2 Pro's 93.2%. Although this benchmark is approaching saturation, the 4.3 percentage point gain confirms continued progress in scientific reasoning.

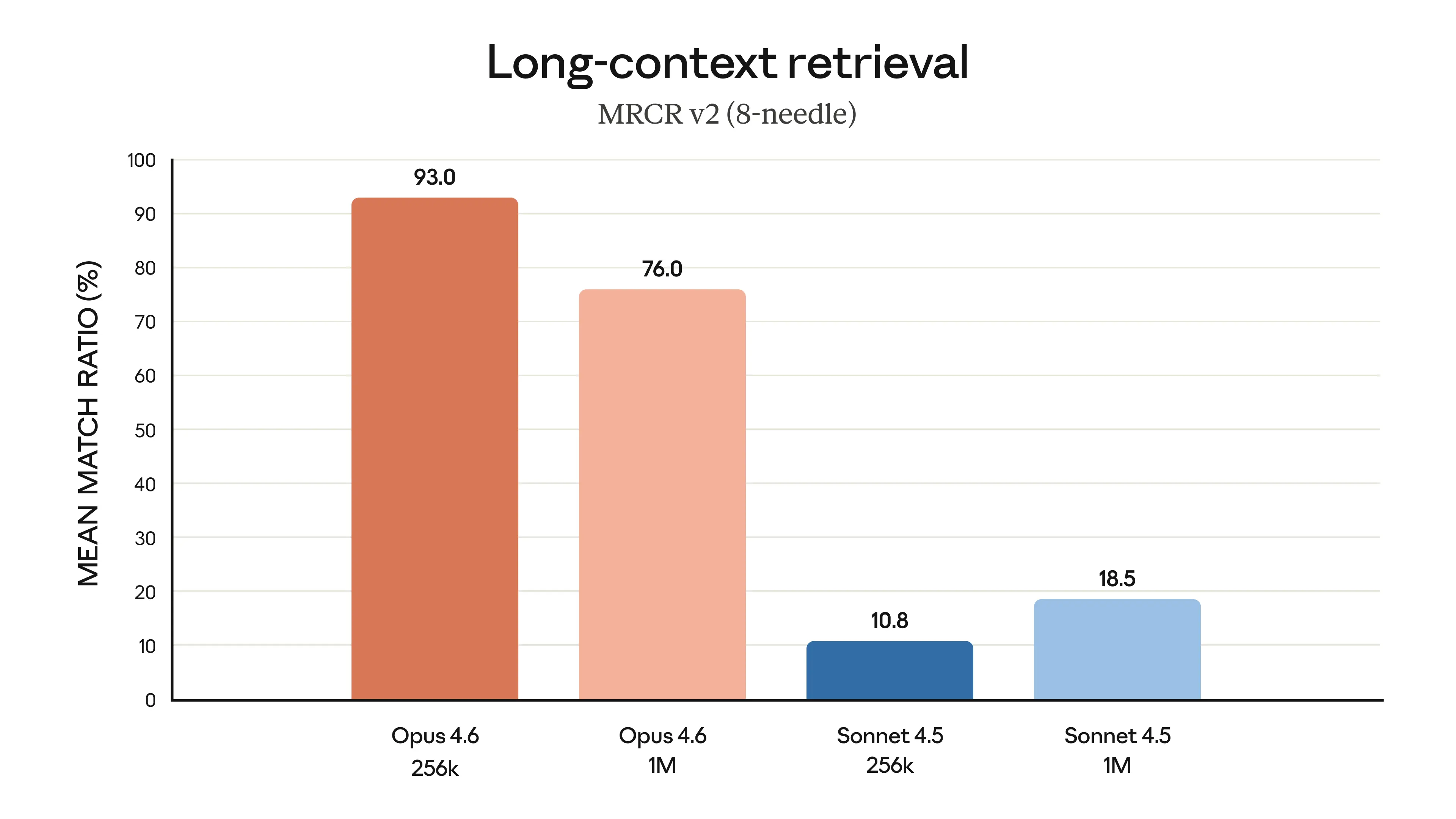

Long Context Capabilities

Long-context retrieval (MRCR v2, needle-in-a-haystack)

Large context windows only matter if a model can reliably retrieve the right information. MRCR v2 measures this by testing a model’s ability to find multiple specific facts buried deep within long inputs.

Opus 4.6 delivers strong long-context retrieval, scoring 93.0% at 256K and 76.0% at 1M context, far outperforming Sonnet 4.5 and demonstrating reliable recall even at extreme context lengths.

GPT-5.2 Thinking shows similarly strong retrieval, achieving 98% on the 4-needle test and 70% on the 8-needle test at 256K, and 85% mean match ratio at 128K. Gemini 3 Pro trails at 77% on the 8-needle benchmark. Together, these results show that Opus 4.6 and GPT-5.2 both pair large context windows with dependable retrieval, while Gemini’s performance degrades more noticeably as context scales.

Multimodal and Visual Reasoning

Visual reasoning (MMMU Pro)

MMMU Pro tests multimodal understanding by requiring models to reason about complex visual information across academic disciplines, evaluating both perception and analytical capabilities.


Opus 4.6 scores 73.9% without tools and 77.3% with tools, improving from Opus 4.5's 70.6%/73.9% and Sonnet 4.5's 63.4%/68.9%. Gemini 3 Pro leads without tools at 81.0%, while GPT-5.2 tops the with-tools category at 80.4%. The gains here are steady but incremental compared to Opus 4.6's leaps in other areas.

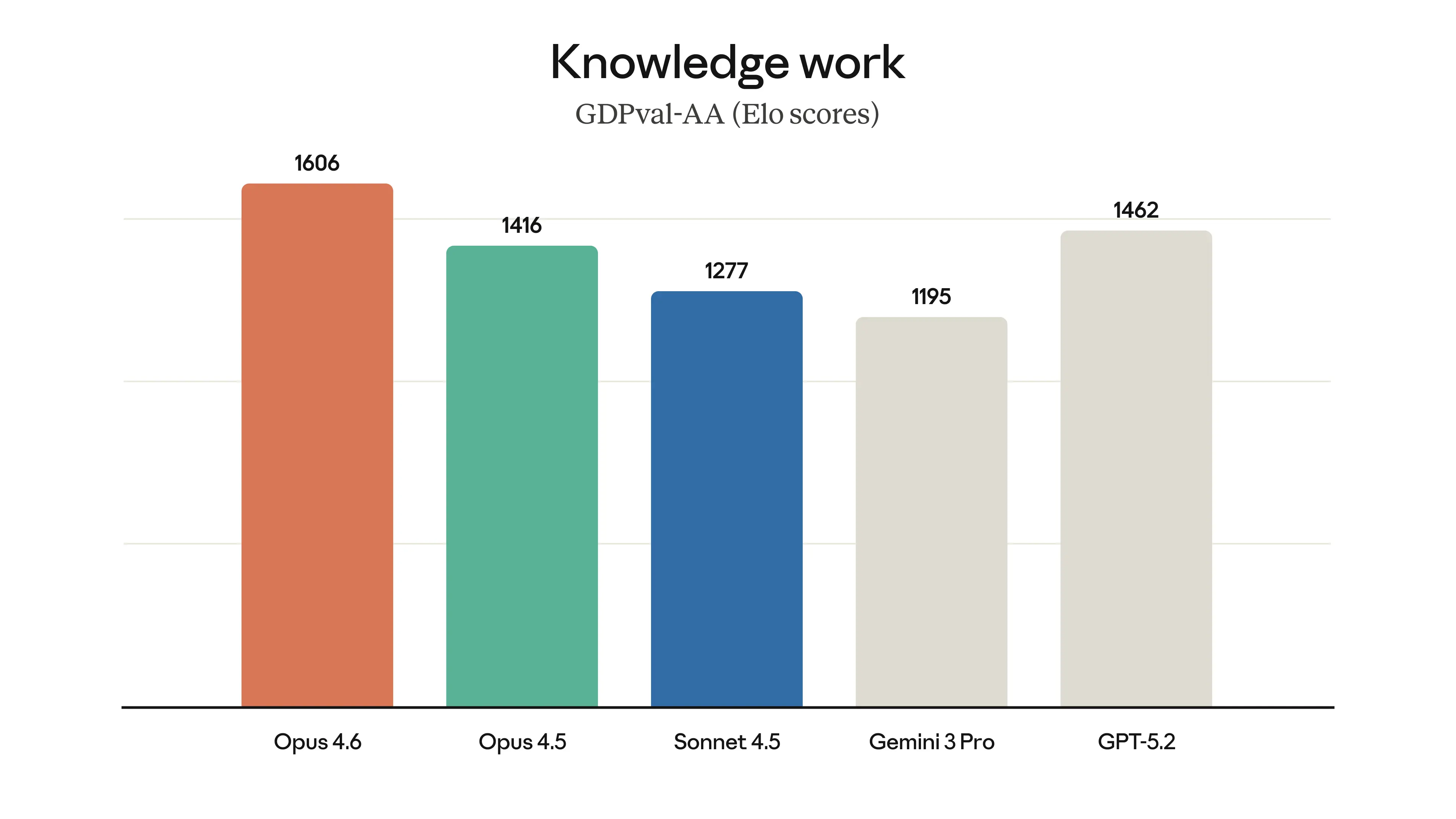

Knowledge Work and Domain-Specific Intelligence

Office tasks (GDPVal-AA Elo)

GDPVal-AA measures performance on knowledge work tasks using an Elo rating system, evaluating ability to produce real work products like presentations, spreadsheets, and documents.

Opus 4.6 scores 1606 Elo, ahead of Opus 4.5's 1416, GPT-5.2's 1462, Sonnet 4.5's 1277, and Gemini 3 Pro's 1195. This 190-point improvement over its predecessor indicates significantly better performance on long-horizon professional tasks requiring planning, execution, and coherent output across multiple steps.
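Elo gaps are easier to interpret as head-to-head odds. The sketch below assumes GDPVal-AA uses the standard logistic Elo model with a 400-point scale (the post does not state the exact formula) and converts the reported ratings into expected scores for Opus 4.6. Under that assumption, the 190-point gap over Opus 4.5 corresponds to an expected score of about 0.75, meaning Opus 4.6's output would be preferred in roughly three of four pairwise comparisons, counting ties as half. The `elo_expected_score` helper is illustrative.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B.

    Assumption: standard logistic Elo with a 400-point scale; GDPVal-AA's
    exact rating formula is not given in this post.
    """
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# GDPVal-AA ratings as reported in this post.
ratings = {
    "Opus 4.6": 1606,
    "GPT-5.2": 1462,
    "Opus 4.5": 1416,
    "Sonnet 4.5": 1277,
    "Gemini 3 Pro": 1195,
}

for rival in ("GPT-5.2", "Opus 4.5", "Sonnet 4.5", "Gemini 3 Pro"):
    p = elo_expected_score(ratings["Opus 4.6"], ratings[rival])
    print(f"Opus 4.6 vs {rival}: expected score {p:.2f}")
```

Because expected scores for the two sides always sum to 1, the same gap read from Opus 4.5's side gives about 0.25.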

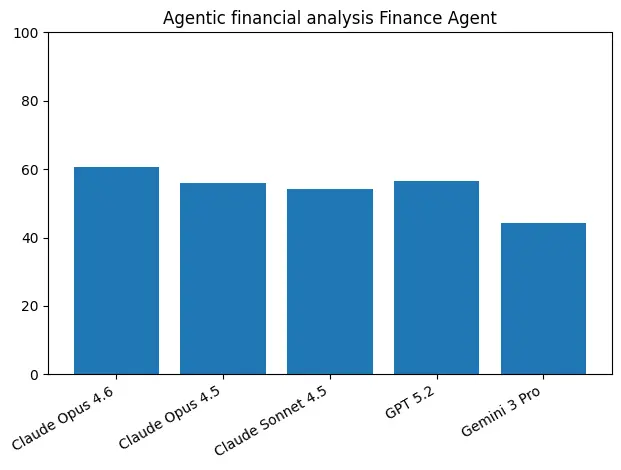

Agentic financial analysis (Finance Agent)

The Finance Agent benchmark evaluates performance on realistic financial analysis tasks, including data interpretation, calculation, and financial reasoning.

Opus 4.6 achieves 60.7% on this benchmark, outperforming Opus 4.5 55.9%, Sonnet 4.5 54.2%, GPT-5.2 56.6%, and Gemini 3 Pro 44.1%. This best-in-class result suggests strong practical utility for financial services applications, quantitative analysis, and business intelligence tasks.

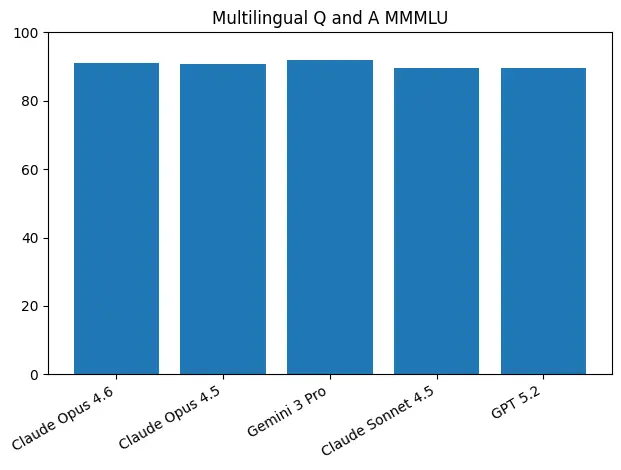

Multilingual Understanding

Multilingual Q&A (MMMLU)

MMMLU evaluates multilingual understanding and reasoning across languages, testing whether models maintain reasoning capability beyond English.

Opus 4.6 achieves 91.1% on MMMLU, roughly matching Opus 4.5's 90.8% and coming in ahead of Sonnet 4.5's 89.5% and GPT-5.2's 89.6%, while trailing Gemini 3 Pro's impressive 91.8%. This near-parity across the Claude lineup suggests consistent multilingual capabilities across model sizes.

What's new and notable

Agent focused

Opus 4.6's dramatic improvements in computer use (+6.4pp), web search (+16.2pp), and terminal operations (+5.6pp) signal that Anthropic optimized specifically for practical agent deployments. The 84.0% BrowseComp score makes this the go-to model for research agents and information retrieval tasks.
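These gains are absolute differences in percentage points, which can read very differently from relative change. A minimal sketch using the Opus 4.5 and Opus 4.6 scores reported in this post (the `deltas` helper is illustrative) reproduces the +6.4pp, +16.2pp, and +5.6pp figures, and adds ARC AGI 2 for contrast, where the 31.2pp jump corresponds to a ratio of about 1.83x, which is what "nearly double" refers to.

```python
# (Opus 4.5, Opus 4.6) scores in percent, as reported in this post.
scores = {
    "OSWorld (computer use)": (66.3, 72.7),
    "BrowseComp (web search)": (67.8, 84.0),
    "Terminal-Bench 2.0 (terminal)": (59.8, 65.4),
    "ARC AGI 2 (novel problems)": (37.6, 68.8),
}


def deltas(old: float, new: float) -> tuple[float, float]:
    """Return (absolute change in percentage points, relative change as new/old)."""
    # Round the difference to one decimal to hide float noise such as 6.400000000000006.
    return round(new - old, 1), new / old


for name, (old, new) in scores.items():
    pp, ratio = deltas(old, new)
    print(f"{name}: {pp:+.1f}pp, {ratio:.2f}x")
```

The same 5 to 6 point absolute gain means much more on a benchmark scored in the 30s than on one scored in the 80s, so it helps to look at both columns.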

Massive leap in abstract reasoning

The 68.8% ARC AGI 2 score—nearly double the previous version—represents one of the largest single-benchmark improvements we've seen in a frontier model update. This isn't just benchmark optimization; it suggests genuine advances in novel problem-solving that should translate to better performance on tasks the model hasn't explicitly been trained for.

MCP Atlas regression

The drop from 62.3% to 59.5% on scaled tool use is one of the few areas where Opus 4.6 steps backward. For teams building agents that coordinate dozens of tools simultaneously, this trade-off matters and may require additional orchestration logic at the application layer.
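One common application-layer pattern (not something the post prescribes) is to narrow the tool list per request, so the model only sees a handful of relevant tools instead of dozens. The minimal sketch below uses a deliberately naive keyword-overlap score; `Tool`, `select_tools`, and the example tool names are all hypothetical, and a production router might use embeddings or a cheaper classifier model instead.

```python
from dataclasses import dataclass


@dataclass
class Tool:
    name: str
    description: str


def select_tools(request: str, tools: list[Tool], k: int = 5) -> list[Tool]:
    """Return up to k tools whose name/description share the most words with the request.

    Naive keyword overlap, for illustration only: no stemming or stopword removal.
    """
    request_words = set(request.lower().split())

    def score(tool: Tool) -> int:
        tool_words = set(f"{tool.name} {tool.description}".lower().replace("_", " ").split())
        return len(request_words & tool_words)

    # sorted() is stable even with reverse=True, so ties keep registration order.
    ranked = sorted(tools, key=score, reverse=True)
    # Drop tools with no overlap at all rather than padding the list.
    return [t for t in ranked[:k] if score(t) > 0]


# Hypothetical tool registry.
tools = [
    Tool("search_orders", "look up a customer order by id"),
    Tool("refund_payment", "issue a refund for a payment"),
    Tool("send_email", "send an email to a customer"),
    Tool("get_weather", "current weather for a city"),
]

picked = select_tools("refund the payment for order 1234", tools, k=2)
print([t.name for t in picked])
```

Only the selected subset would then be passed to the model for that turn, trading a small routing step for a much smaller tool surface.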

Real work sees strong gains

The 60.7% Finance Agent score and 1606 GDPVal Elo suggest this model excels at the kind of long-horizon, multi-step professional tasks that matter for enterprise deployments—from financial modeling to document generation.

Why this matters for your agents

Opus 4.6 is optimized for the most powerful agents. It’s better at the core tasks the best agents actually perform: using computers, running terminals, searching the web, and reasoning across long, multi-step workflows.

If you’re running agents for research, financial analysis, or knowledge work, Opus 4.6 is worth testing now. For large-scale tool orchestration, MCP Atlas is a known trade-off, but for many setups the gains elsewhere will outweigh it.
