AI agents for your boring ops tasks

Trusted by companies of all sizes

Hey Vellum, automate my SEO writing process

I want to build an agent that looks at keywords in a Google Sheet, researches top-ranking articles, and creates a draft for me in a Google Doc every day at 9 AM

I’ve built your SEO/GEO agent. Here’s what it does:

- Gets a keyword from a sheet

- Analyzes SEO intent and researches sources

- Writes an article draft in Google Docs

- Logs the doc link in a Google Sheet next to your keyword

Build an agent that prepares me for sales meetings by researching the prospect company, finding recent news, and creating a short summary

Create an agent that tracks key product signals from HubSpot and posts a weekly internal update in Notion/Slack

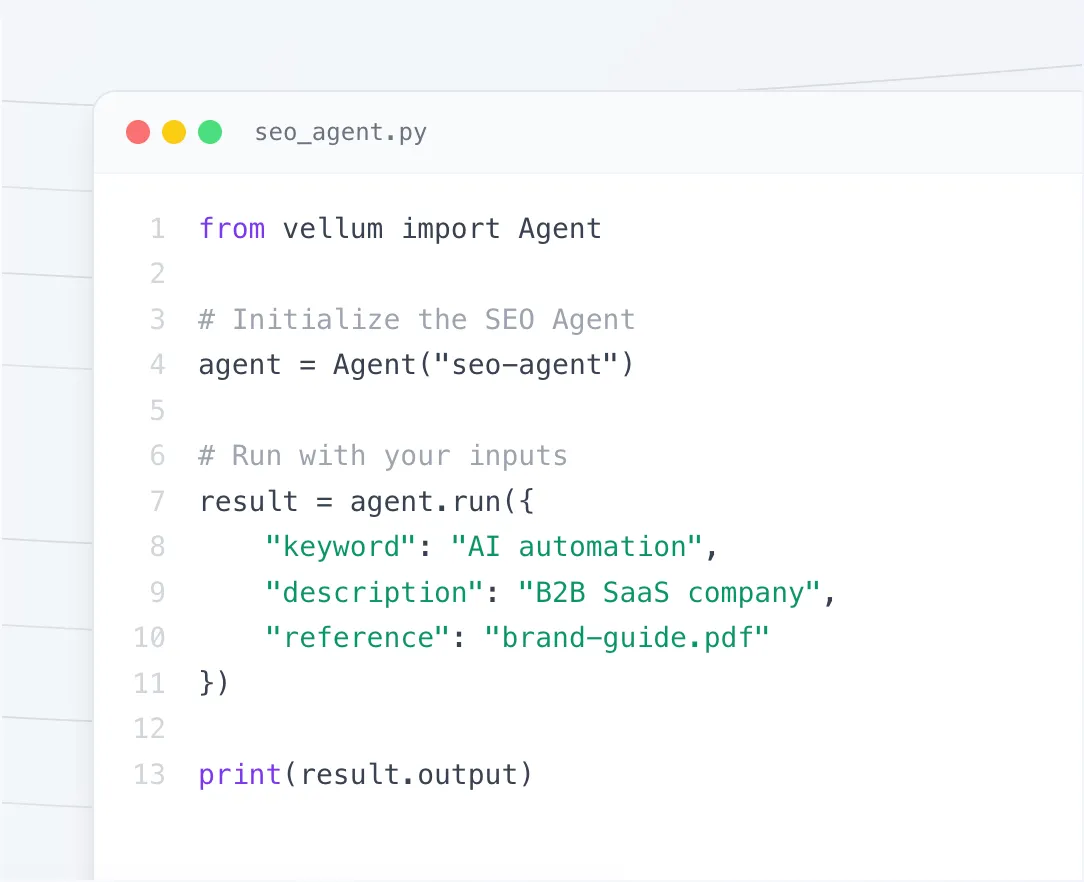

Built-in triggers

Run your AI workflows on a schedule or make them respond to app triggers.
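To make the scheduling idea concrete, here is a minimal sketch of how a "run every day at 9 AM" trigger might compute its next firing time. It uses only the Python standard library and is not Vellum's scheduler; the function name `next_daily_run` is an assumption made up for this illustration.

```python
from datetime import datetime, time, timedelta


def next_daily_run(now: datetime, run_at: time) -> datetime:
    """Return the next moment strictly after `now` at which a daily job fires.

    Hypothetical helper for illustration only; not part of any Vellum API.
    """
    # Build today's candidate run time, keeping the timezone of `now` (if any).
    candidate = datetime.combine(now.date(), run_at, tzinfo=now.tzinfo)
    # If today's slot is now or already in the past, roll over to tomorrow.
    if candidate <= now:
        candidate += timedelta(days=1)
    return candidate
```

Calling `next_daily_run(datetime(2024, 1, 1, 8, 0), time(9, 0))` gives 9:00 on the same day, while calling it at or after 9:00 gives 9:00 on the following day.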

1000+ Integrations

Build agents that connect to your tools and run on your behalf.

No hosting fees

Run your workflows as much as you need without surprise charges.

Understand how your agent makes decisions

Preview the full workflow before it runs.

Discover what's happening at every step.

Run details (8.30s total)

- Daily 7 AM: 0.01s
- Get Calendar Events: 0.01s
- Filter Standups: 0.01s
- Meeting Prep Map: 0.01s
- Compile Summary: 0.01s
- Send to Slack: 0.01s

Output details

Calendar Events

Found 5 external meetings scheduled for today including client calls and partner syncs.

Summary Compiled

Found 3 standups for today. Prepared meeting briefs for Engineering Sync, Product Review, and Team Retro.

Sent to Slack

Channel: #daily-standups

Message: Today's Meeting Prep Summary

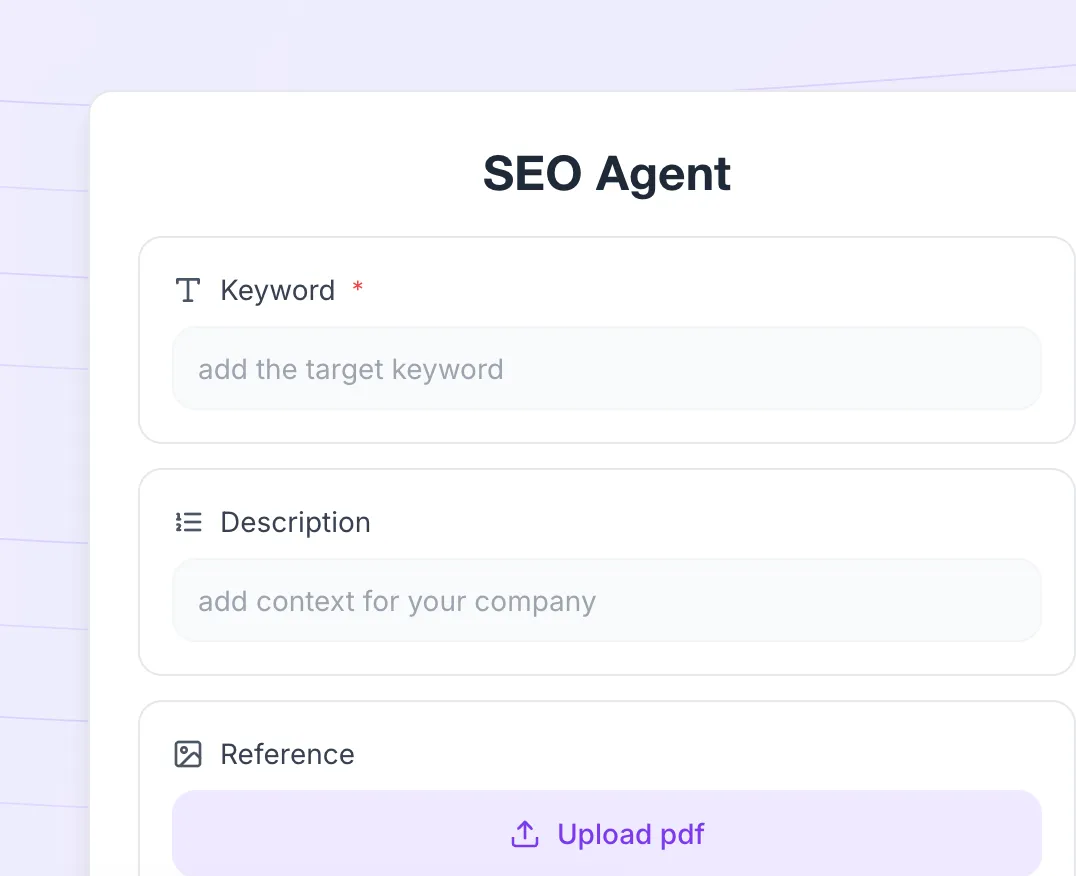

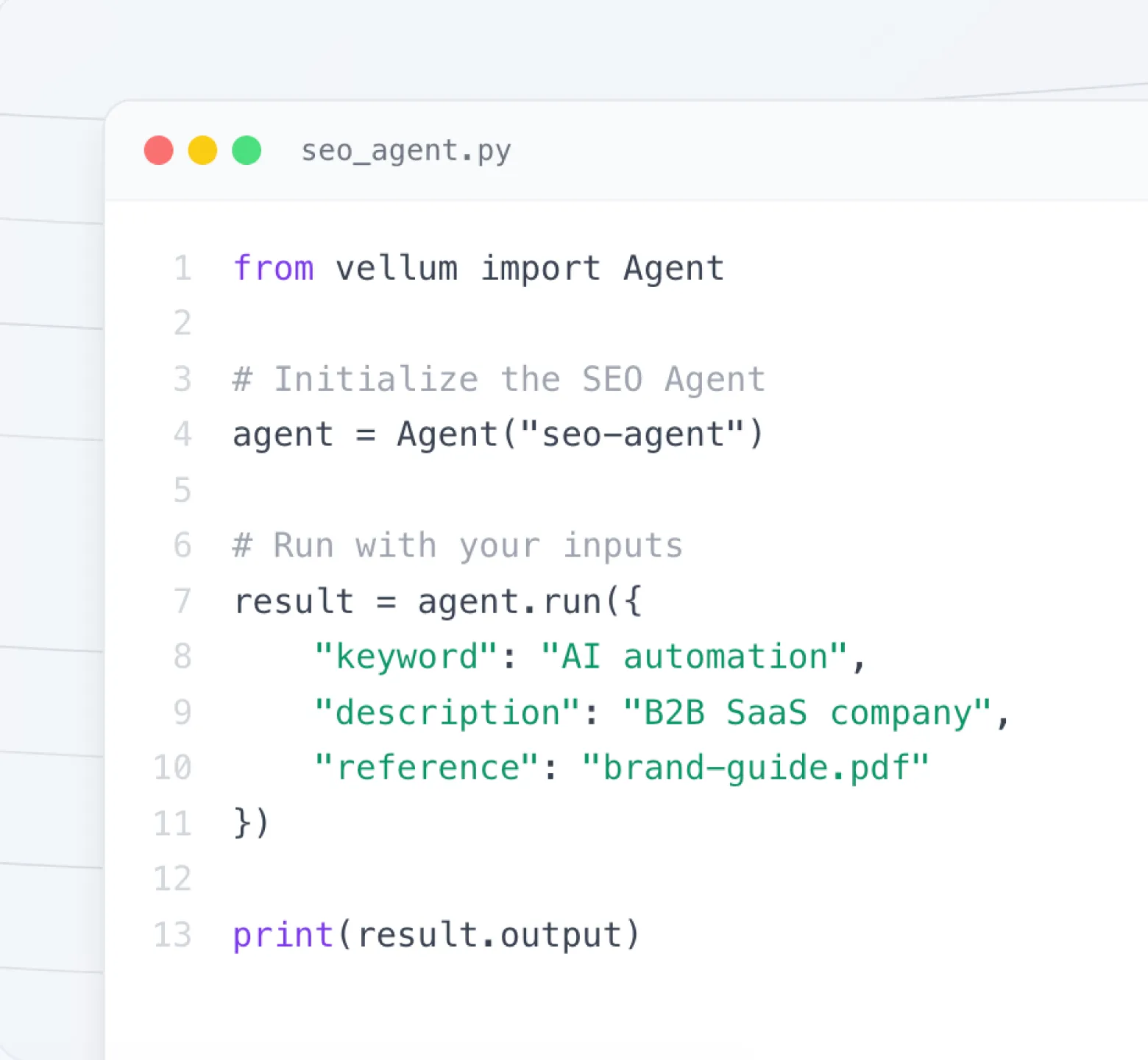

Inspect the generated code and use it locally.

    from vellum.workflows import BaseWorkflow

    from .nodes.compile_summary import CompileSummary
    from .nodes.daily_summary_output import DailySummaryOutput
    from .nodes.filter_standups import FilterStandups
    from .nodes.get_calendar_events import GetCalendarEvents
    from .nodes.map import MeetingPrepMap
    from .nodes.send_to_slack import SendToSlack
    from .triggers.scheduled import Daily7Am


    class Workflow(BaseWorkflow):
        graph = {
            GetCalendarEvents >> FilterStandups >> MeetingPrepMap >> CompileSummary >> SendToSlack >> DailySummaryOutput,
            Daily7Am >> GetCalendarEvents,
        }

        class Outputs(BaseWorkflow.Outputs):
            success = DailySummaryOutput.Outputs.value
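The `>>` chains in the generated code read as edges from one node to the next. As a rough illustration of how that kind of syntax can work in plain Python, here is a toy sketch built on operator overloading. It is not Vellum's implementation (the generated code chains classes, while this sketch chains instances for simplicity), and `Step`, `Chain`, and `edges` are names invented for this example.

```python
from __future__ import annotations


class Step:
    """A named step that can be chained with `>>` (toy stand-in for a node)."""

    def __init__(self, name: str) -> None:
        self.name = name

    def __rshift__(self, other: Step | Chain) -> Chain:
        # `a >> b` starts a chain at `a` and appends `b`.
        return Chain([self]) >> other


class Chain:
    """An ordered sequence of steps produced by `>>`."""

    def __init__(self, steps: list[Step]) -> None:
        self.steps = steps

    def __rshift__(self, other: Step | Chain) -> Chain:
        # Appending a chain concatenates; appending a step adds one element.
        tail = other.steps if isinstance(other, Chain) else [other]
        return Chain(self.steps + tail)

    def edges(self) -> list[tuple[str, str]]:
        # Each consecutive pair of steps is one directed edge.
        return [(a.name, b.name) for a, b in zip(self.steps, self.steps[1:])]


chain = Step("GetCalendarEvents") >> Step("FilterStandups") >> Step("SendToSlack")
print(chain.edges())
# [('GetCalendarEvents', 'FilterStandups'), ('FilterStandups', 'SendToSlack')]
```

Because `>>` is left-associative, a long chain is built one step at a time, which is why a whole pipeline can be written on a single line.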

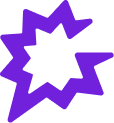

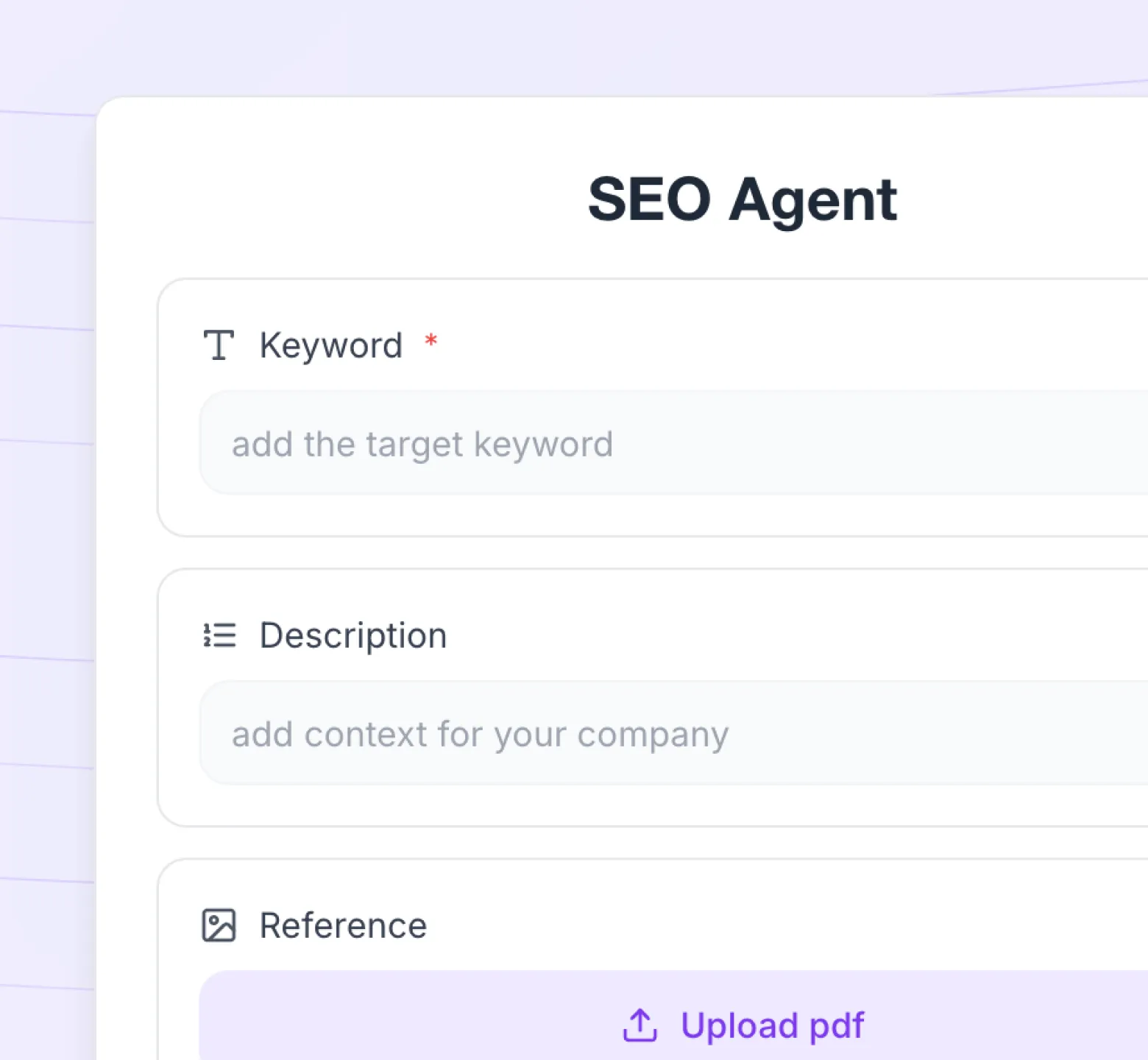

Interact with your agents

Use the built-in UI for quick interactions, and switch to other modes when you need more control.

Use via UI

Run your agent through a built-in UI.

Run from code

Call the agent from your app or backend.
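As a rough idea of what calling an agent from a backend could look like, the sketch below builds an HTTP POST request with Python's standard library. Everything specific here is an assumption for illustration: the URL is a placeholder, and the `{"inputs": ...}` payload shape, the bearer-token header, and the `build_agent_request` helper are hypothetical rather than Vellum's documented API. Check the product documentation for the real endpoint and client.

```python
import json
import urllib.request

# Hypothetical placeholder URL; replace with the real endpoint for your agent.
AGENT_URL = "https://example.com/agents/meeting-prep/run"


def build_agent_request(
    inputs: dict, api_key: str, url: str = AGENT_URL
) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request that would run an agent.

    The payload shape and auth header are assumptions, not a documented API.
    """
    body = json.dumps({"inputs": inputs}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


request = build_agent_request({"date": "2024-01-01"}, api_key="test-key")
```

Sending the request would then be a matter of passing it to `urllib.request.urlopen`, ideally with a timeout and error handling around the call.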

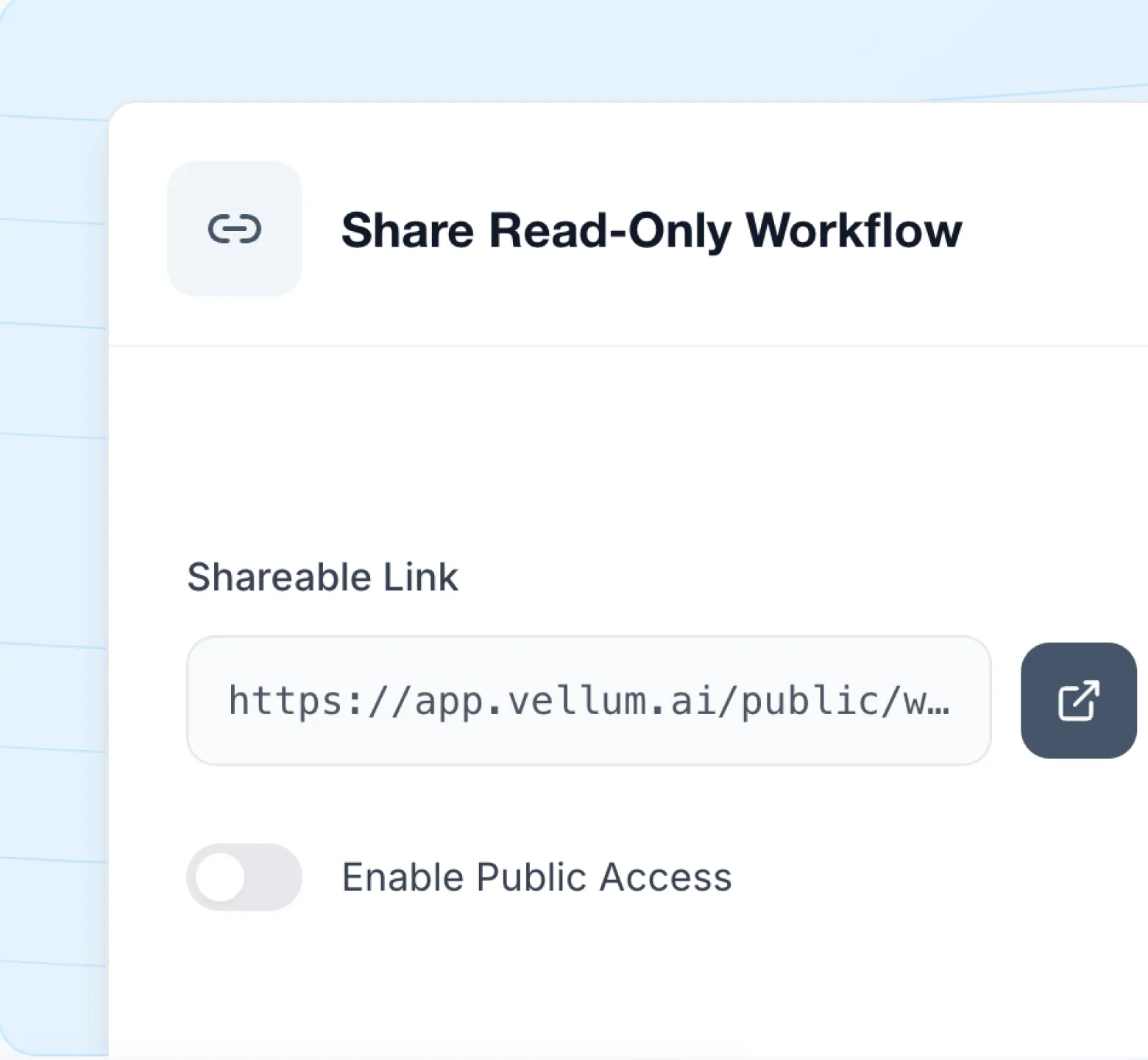

Share publicly

Share a read-only version that your team can duplicate.

Custom UI

Vibe-code your own custom interface.

Forget generic templates. Just use plain English.

Get started by describing your process. Vellum takes care of the rest.