Many customers have asked for these models, so we now offer a native integration with three IBM Granite models: Granite 13b Chat V2, Granite 20b Multilingual, and the smaller Granite 3.2-8b Instruct.

About the models

Granite-13b-chat-v2 is a chat-focused model tuned for retrieval-augmented generation (RAG) use cases. In version 2.1.0, IBM introduced a new alignment method designed to improve the performance of general-purpose LLMs. The method works in two phases. It first strengthens the base model by adding useful knowledge. It then sharpens instruction following by teaching the model skills and tone.

Granite-20b-multilingual also uses a new training approach. Instead of massive pre-training followed by a smaller alignment phase, it relies on large-scale, targeted alignment from the start. The goal is a general-purpose model that works well for chat and RAG, and also across a wide range of NLP and downstream tasks.

Granite-3.2-8b-instruct is designed for general instruction-following tasks. It can be integrated into AI assistants across many domains, including business applications.

With this integration, you can test how these models perform on your use cases and compare them side by side with other models.

How to enable the models

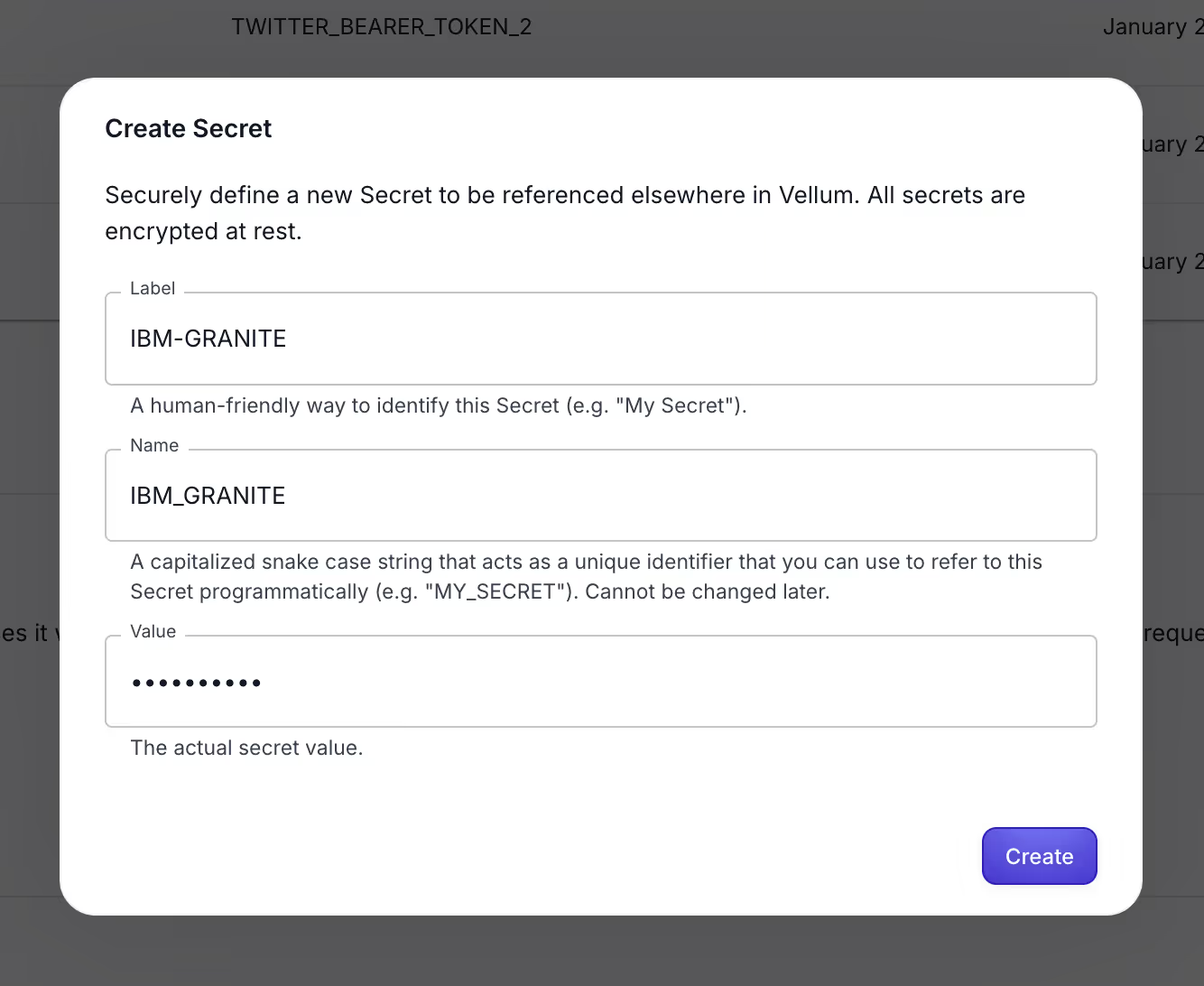

The models are now available to add to your workspace. To enable one, get your API key from your IBM profile and add it as a Secret named IBM on the “API keys” page:

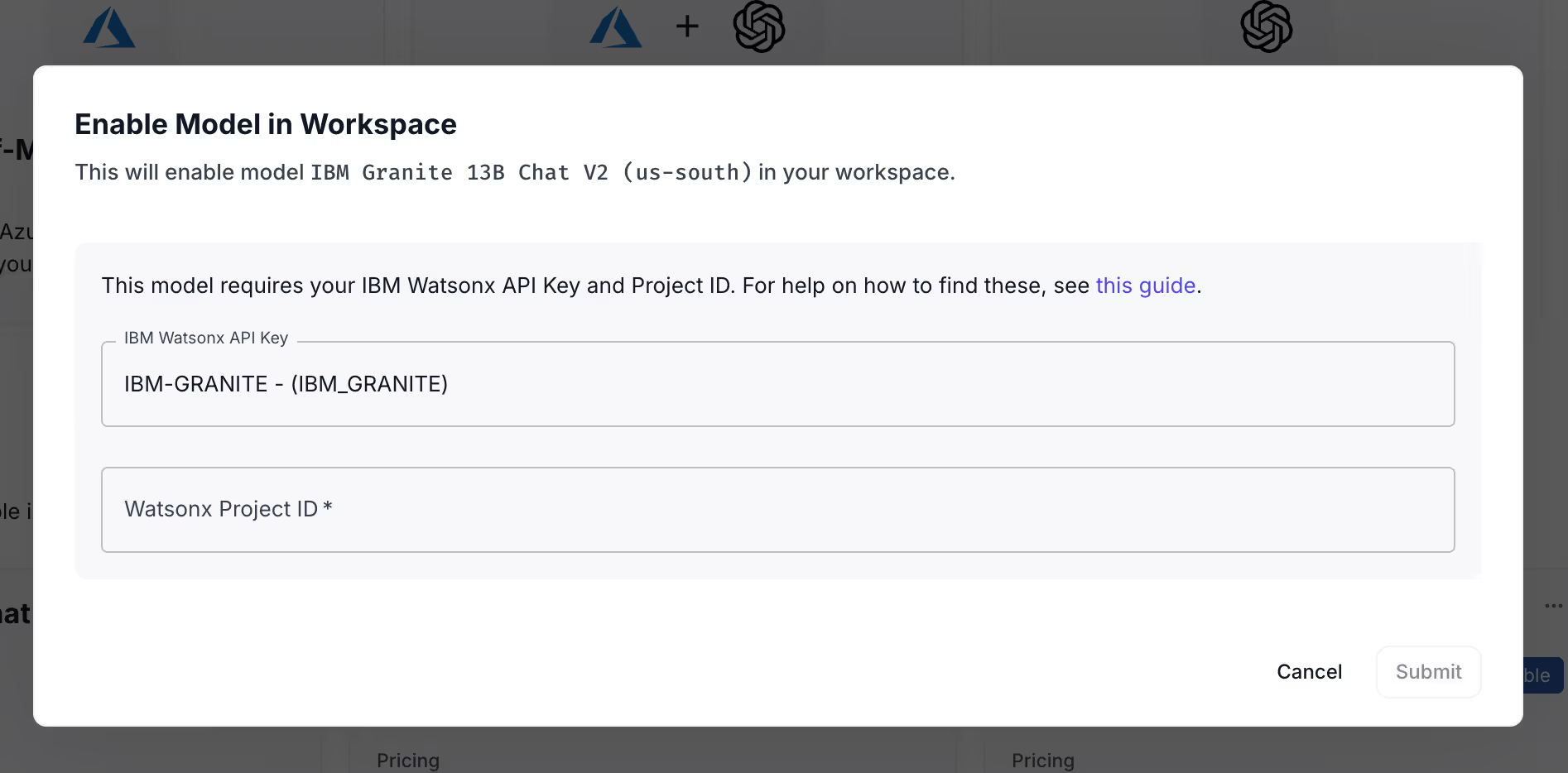

Next, open the “Models” tab and enter the API key and your Project ID for the IBM Granite model you want to enable.

Finally, select the model you just enabled in your prompts and workflow nodes.

Compare with other models

Not sure which model performs best for your use case?

With Vellum Evaluations, you can test and compare different LLMs side by side, including models from IBM, OpenAI, Anthropic, Google, and more. We give you the tools and best practices to evaluate accuracy, consistency, and helpfulness, so you can ship AI features that actually work in production.
