Another great battle is taking shape in the world of Generative AI. Just like the “fine-tuning vs. RAG” debate, a new line is being drawn in the sand, sparked by two major players. The question on the table: should AI builders focus on complex multi-agent systems, or is a new discipline the true path to production-ready agents?

The debate kicked off when Cognition, the company behind the AI coding agent Devin, posted a blog arguing that multi-agent architectures are a "tempting idea" that is "quite bad in practice." They advocate instead for a single-agent approach powered by what they call “Context Engineering.”

Just one day later, Anthropic, creators of the Claude model series, presented a detailed counterargument on how they built their multi-agent research system, showcasing its power for complex, parallelizable tasks.

So, who is right? Like most things in AI, the answer is nuanced. This debate isn't just about technical architecture; it's about a fundamental shift in how we think about building reliable and efficient AI agents.

The Multi-Agent Approach

To understand why Context Engineering is causing a stir, we first need to understand the dominant paradigm for building complex agents: the multi-agent system.

Imagine a highly experienced Senior Manager tasked with delivering a complex project. The client needs it in days, but it would take the manager weeks to complete alone. This manager is our "lead agent."

Luckily, the manager has 20 new interns - our "subagents." They are capable but lack deep experience. The manager breaks the project into 20 tasks, delegates them, and plans to assemble the final product from their work. This is the orchestrator-worker pattern Anthropic describes, and it has clear benefits: massive parallelism and the ability to tackle huge problems.

But problems quickly emerge.

- Lost Context: The manager didn't give each intern the full project brief. The interns don't have the "big picture," so they might optimize for the wrong goal or stray from the main objective.

- Resource Gaps: They don't know where critical information is stored or lack the credentials to access shared drives or company Slack.

- Compounding Errors: Even if 15 interns perform perfectly, the compounded errors of the other five can jeopardize the entire project, creating more work for the manager to fix.

This is the challenge of multi-agent systems. While powerful in theory, they introduce immense overhead in coordination, context sharing, and error handling. Each subagent is a potential point of failure, and their mistakes can cascade.

Enter Context Engineering

Cognition’s argument is that instead of trying to manage a team of flawed interns, we should focus on empowering the expert - the single agent. This is the core of Context Engineering.

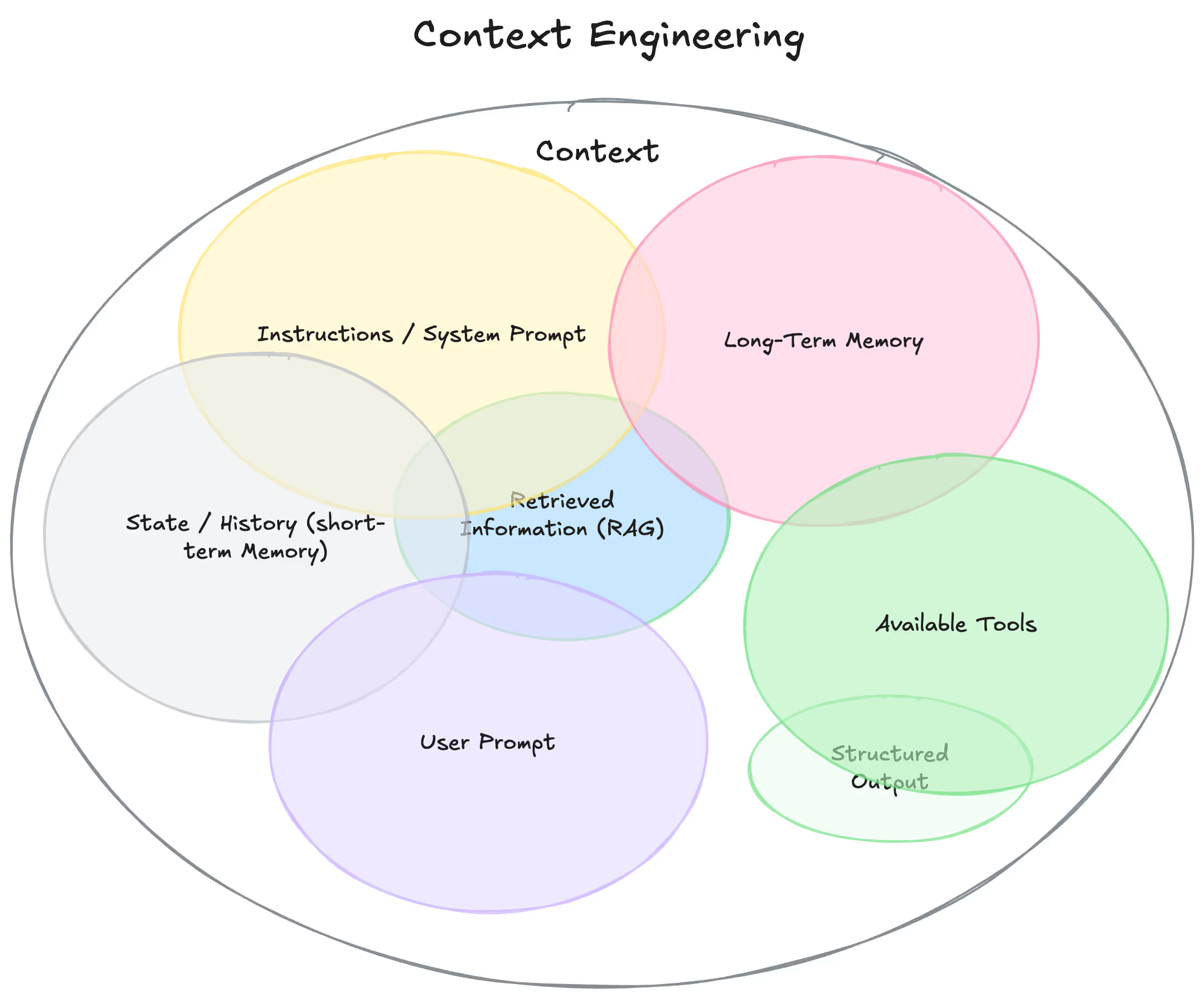

If prompt engineering is the art of crafting the perfect initial instruction, Context Engineering is the discipline of dynamically managing the entirety of information an agent sees at every step of a complex task. It’s about ensuring the LLM has precisely the right information, at the right time, in the right format.

As Andrej Karpathy, former Director of AI at Tesla and founding member of OpenAI, beautifully put it, it's the "delicate art and science of filling the context window with just the right information for the next step."

Let’s revisit our analogy. Instead of hiring 20 interns, imagine giving our Senior Manager a magical, dynamic briefing book.

- When they start a task, the book shows them the high-level project goals.

- When they need to access a file, the book automatically opens to the page with the file's contents and relevant permissions.

- When they finish a step, the book summarizes the key decisions made and tidies itself up, preparing for the next task.

This magical book is Context Engineering in action.

It’s a single-threaded, linear process that avoids the coordination chaos of multi-agents. By meticulously controlling the context, the single agent can maintain coherence, reduce errors, and perform with greater reliability. The rise of models with massive context windows (like Gemini 2.5 and Claude 4) has made this approach more viable than ever before.

Why This Isn't Just an Academic Debate

The choice between these architectures has direct consequences for any team building with AI.

- Cost: Multi-agent systems can burn through tokens at an alarming rate. Anthropic notes their research agents use ~15x more tokens than a standard chat interaction. Context Engineering aims for efficiency by only including what’s necessary.

- Reliability: The "game of telephone" between subagents can lead to unpredictable, emergent errors that are a nightmare to debug. A well-engineered single agent follows a more predictable, traceable path.

- Complexity: Building, testing, and maintaining a multi-agent system is inherently more complex. You’re not just managing prompts; you're managing inter-agent communication protocols.

The truth is, the line is blurry. Even a single AI agent needs to retrieve information (a form of context), and a multi-agent system is useless if the individual agents have poor context. The real takeaway is that effective context management is non-negotiable.

How Vellum Helps You Become a Context Engineer

This brings us to the practical question: how does a developer actually do Context Engineering? It requires a robust toolkit for experimentation, evaluation, and production management. This is where Vellum becomes your control panel for building sophisticated agents.

- Orchestrating Complex Chains: Context Engineering isn't about one massive prompt. It's about a sequence of steps: retrieve user data, search a vector database, call a tool, summarize previous turns, and then assemble it all into a final prompt for the LLM. Vellum's Workflows are designed for this, allowing you to visually build and manage these multi-step context-building chains without writing mountains of boilerplate code.

- Experimenting with Context Strategies: Is it better to provide full API documentation or just a summary? Should you include the last three conversation turns or a condensed summary? These are questions you can only answer through testing. With Vellum's Scenarios and Test Suites, you can run head-to-head comparisons of different context strategies against your key use cases and see which performs better on a granular level.

- Evaluating the Outcome: A change in context can have subtle effects on LLM reliability. You need a reliable way to measure if your change improved factual accuracy, reduced hallucinations, or produced a better-formatted output. Vellum’s evaluation tools - from semantic similarity and Regex checkers to powerful LLM-as-Judge evaluators - allow you to define what "good" looks like and objectively measure the impact of your AI engineering efforts.

By combining powerful orchestration, experimentation, and evaluation, Vellum provides the infrastructure needed to move from basic prompting to true Context Engineering.

The Path Forward

The "multi-agent vs. single-agent" debate is a sign of a maturing industry. We're moving past simple chatbots and tackling stateful, long-running tasks that demand new levels of engineering discipline.

The future isn't a victory for one side, but a synthesis of both. The best systems will likely be hybrid:

- A top-level "Context Engineer" agent will manage the overall task.

- For highly parallelizable sub-problems (like searching 10 different documents at once), it may spin up temporary, specialized agents.

- Crucially, each of these temporary agents will itself benefit from meticulously engineered context.

The next wave of breakthroughs in AI won't just come from bigger models. It will come from the developers and engineers who master the art of context. It’s time to stop thinking of ourselves as just prompt engineers and start becoming context engineers.

Latest AI news, tips, and techniques

Specific tips for Your AI use cases

No spam

Each issue is packed with valuable resources, tools, and insights that help us stay ahead in AI development. We've discovered strategies and frameworks that boosted our efficiency by 30%, making it a must-read for anyone in the field.

This is just a great newsletter. The content is so helpful, even when I’m busy I read them.

Experiment, Evaluate, Deploy, Repeat.

AI development doesn’t end once you've defined your system. Learn how Vellum helps you manage the entire AI development lifecycle.

.png)