Wait, it’s already November?! 😱 That means another product update! Here’s everything new in Vellum from October 2023. This month we focused on making it possible to integrate with any LLM hosted anywhere, deepening what’s possible with Test Suites, and improving the performance of our web application. Let’s take a closer look!

Model Support

Universal LLM Support

We’ve been hard at work for the past two months rebuilding the core of Vellum’s backend. The end result? We can now support just about any LLM, anywhere, including custom and privately hosted models.

We’ve since helped customers integrate:

- Fine-tuned OpenAI models

- OpenAI models hosted in Microsoft Azure

- Anthropic models hosted in AWS Bedrock

- WizardMath models hosted on Replicate

- And more!

This means you can use Vellum as a unified API for interacting with any LLM, and to benchmark models against one another.

If you have a custom model hosted somewhere we don’t yet support, let us know! We’d happily add it to our queue.

Models Page

With universal LLM support in place, it’s more important than ever to be able to discover which models are available, understand what they’re good for, and hide those that aren’t relevant to your organization. To that end, we’ve introduced the new “Models” page, accessible from the side navigation. Larger organizations might use this to restrict which models their employees are allowed to use within Vellum.

Test Suites & Evaluation

LLM evaluation is a big focus for us right now. The first new features to come out of the gate here are…

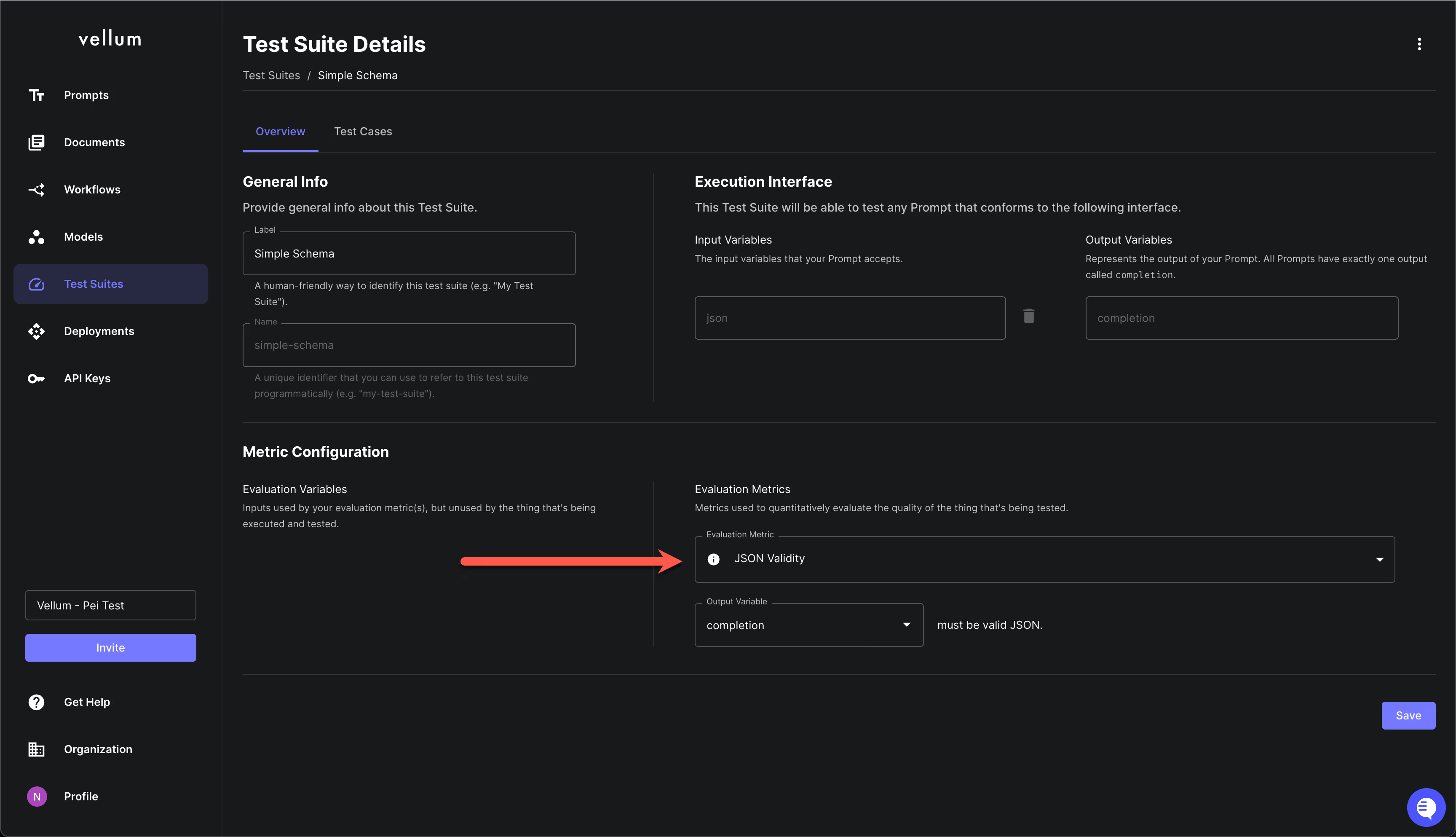

JSON Validity Eval

You can now configure a Test Suite to assert that the output of a Prompt is valid JSON. This is particularly useful when building data extraction prompts or when using OpenAI’s function calling feature.
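As a rough illustration of what a JSON validity assertion checks, here is a minimal Python sketch. It is not Vellum’s implementation; the function name `is_valid_json` is hypothetical and exists only for this example.

```python
import json


def is_valid_json(output: str) -> bool:
    """Return True if the raw model output parses as JSON.

    Hypothetical helper for illustration; not part of Vellum's API.
    """
    try:
        json.loads(output)
    except (json.JSONDecodeError, TypeError):
        # Malformed JSON, or a non-string value such as None.
        return False
    return True


# A well-formed object passes; chatty or truncated output fails.
print(is_valid_json('{"name": "Ada", "age": 36}'))
print(is_valid_json('Sure! Here is the JSON: {"name": "Ada"}'))
```

A check like this catches the common failure where a model wraps the JSON in extra prose or cuts it off mid-object.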

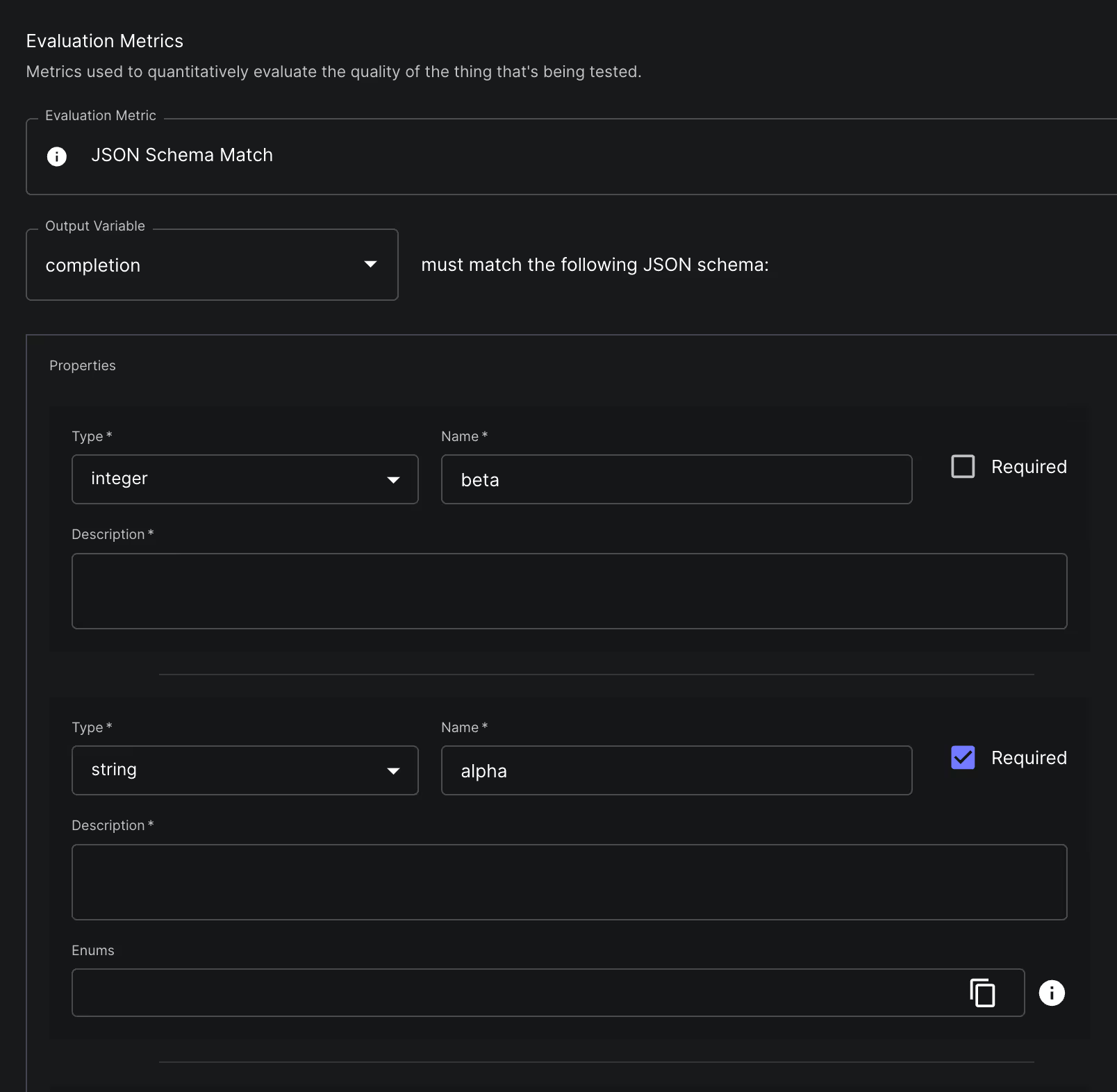

JSON Schema Match Eval

In addition to asserting that a Prompt outputs valid JSON, you might also want to assert that the output JSON conforms to a specific shape/schema. This is where JSON Schema Match comes in.

You can use Test Suites to define the schema of the expected JSON output, and then make those assertions. This is crucial when developing data extraction pipelines.
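To make the idea of a schema match concrete, here is a simplified Python sketch. It assumes a small subset of JSON Schema (`type`, `required`, `properties`, and `items`) and is not how Vellum evaluates schemas; `matches_schema` and the invoice schema are hypothetical names chosen for this example.

```python
import json

# Maps a subset of JSON Schema type names to Python types.
_TYPES = {
    "object": dict,
    "array": list,
    "string": str,
    "number": (int, float),
    "integer": int,
    "boolean": bool,
    "null": type(None),
}


def matches_schema(value, schema: dict) -> bool:
    """Check a parsed JSON value against a simplified schema (illustrative only)."""
    expected = schema.get("type")
    if expected is not None:
        # bool is a subclass of int in Python, so reject it for numeric types.
        if isinstance(value, bool) and expected in ("number", "integer"):
            return False
        if not isinstance(value, _TYPES[expected]):
            return False
    if isinstance(value, dict):
        # Every required key must be present.
        if any(key not in value for key in schema.get("required", [])):
            return False
        # Declared properties that are present must match their sub-schema.
        for key, sub_schema in schema.get("properties", {}).items():
            if key in value and not matches_schema(value[key], sub_schema):
                return False
    if isinstance(value, list) and "items" in schema:
        return all(matches_schema(item, schema["items"]) for item in value)
    return True


# Hypothetical schema for a data extraction prompt.
invoice_schema = {
    "type": "object",
    "required": ["vendor", "total"],
    "properties": {
        "vendor": {"type": "string"},
        "total": {"type": "number"},
        "line_items": {"type": "array", "items": {"type": "string"}},
    },
}

output = json.loads('{"vendor": "Acme", "total": 42.5, "line_items": ["widget"]}')
print(matches_schema(output, invoice_schema))
```

The full JSON Schema specification covers far more than this sketch (enums, formats, numeric ranges, and so on), so treat it as a way to picture the assertion rather than a substitute for a real validator.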

Cancelling Test Suite Runs

It used to be that once you kicked off a Test Suite Run, there was no going back. Now, you can cancel a run while it’s queued/running. This is particularly helpful if you realize you want to make more tweaks to your Prompt and don’t want to waste tokens on a now-irrelevant run.

Prompt Deployments

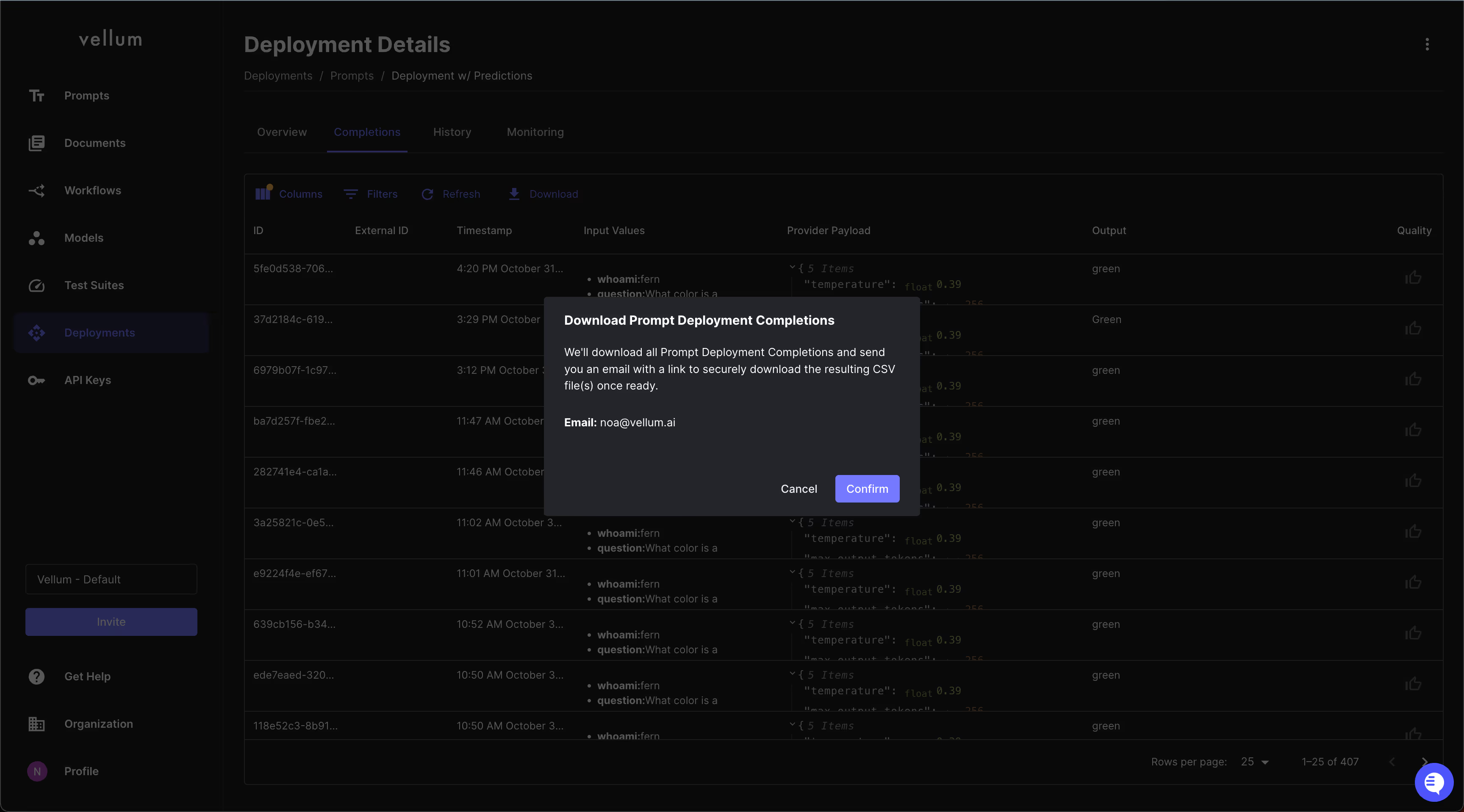

Monitoring Data Export

Vellum captures valuable monitoring data every time you invoke a Prompt Deployment via API and provides a UI to see that data. However, we’re strong believers that this data is ultimately yours, and we don’t want to hold it hostage.

To follow through on this commitment, we’ve made it easy to export your Prompt Deployment monitoring data as a CSV. This can be helpful if you want to perform some bespoke analysis, or fine-tune your own custom model. Here’s an in-depth demo of how this works.
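As one example of the kind of bespoke analysis an export enables, here is a short Python sketch that averages a numeric column per group. The column names `model` and `latency_ms` are assumptions made for illustration; check the header row of your actual export, since the real columns may differ.

```python
import csv
import io
from collections import defaultdict
from statistics import mean


def average_by_column(csv_text: str, group_col: str, value_col: str) -> dict:
    """Average a numeric column per group in CSV text (illustrative helper)."""
    groups = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        groups[row[group_col]].append(float(row[value_col]))
    return {group: mean(values) for group, values in groups.items()}


# Made-up sample standing in for an exported file; the columns are hypothetical.
sample_export = """model,latency_ms
model-a,100
model-a,300
model-b,250
"""

print(average_by_column(sample_export, "model", "latency_ms"))
```

To run this against a real export, read the file’s contents into a string first and pass in whichever column names your export actually contains.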

UI/UX Improvements

Performance

We’ve made a big push this month to make our Prompt and Workflow engineering UIs more performant. You should now be able to experiment with complex Prompts & Workflows without being slowed down by lag. If you experience any lingering issues, please let us know!

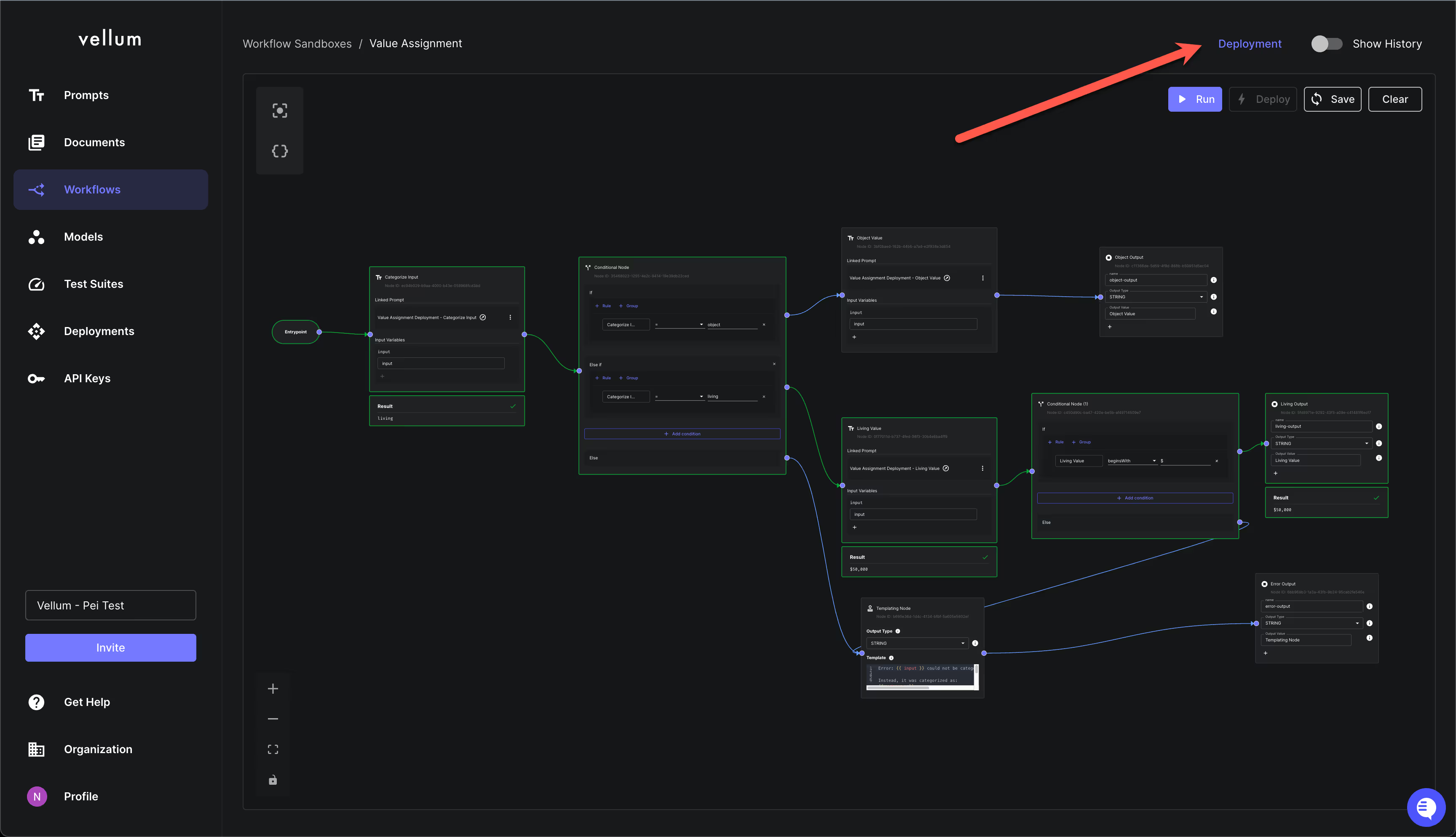

Back-linking from Workflow Sandboxes to Deployments

It’s always been possible to navigate from a Workflow Deployment to the Sandbox that generated it. However, we didn’t have any UI to perform the reverse navigation. Now we do!

We’ll soon have the same for Prompt Sandboxes → Deployments.

Resizing Workflow Scenario Inputs

You can now resize the inputs to Workflow Scenarios so that you can see all the contents within.

API

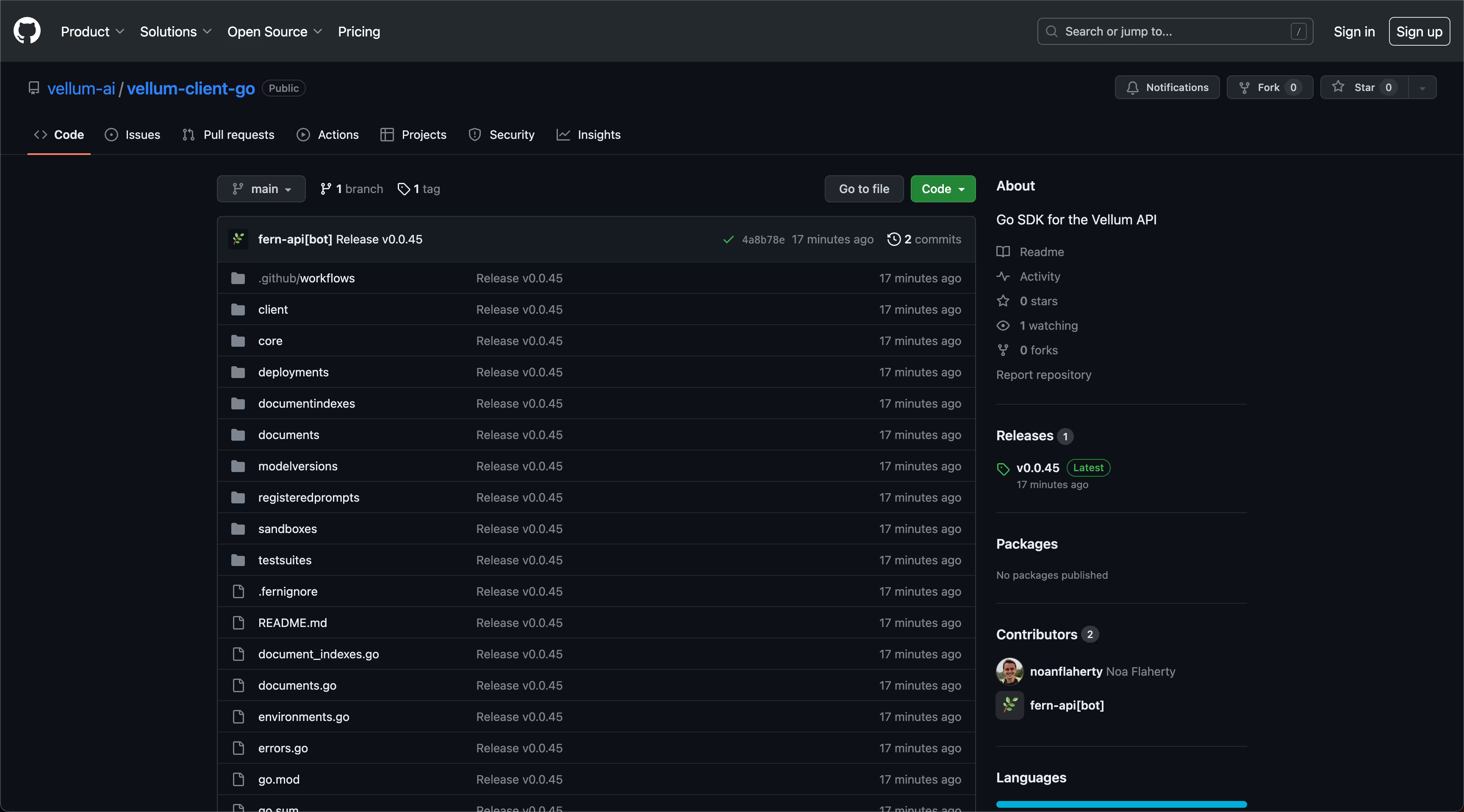

New Go Client

We now have an officially supported API Client in Go! 🎉 You can access the repo here. Big shout out to our friends at Fern for helping us support this!

Looking Ahead

We’re already well underway on some exciting new features that will roll out in November. Keep an eye out for Test Suite support for Workflows, a deepened monitoring layer, UI/UX improvements for our Prompt & Workflow sandboxes, and more!

As always, please continue to share feedback with us via Slack or our Discord here: https://discord.gg/6NqSBUxF78

See you next month!
