Hello everyone and welcome to the first official product update from Vellum! We plan to publish these more regularly to share what we’ve shipped and what’s coming next, but for this first one, we have quite a backlog of new features from the past few weeks to share!

Let’s go through each, one product area at a time.

Playground

Streaming Support

We’ll now stream output immediately as it becomes available, giving quick insight into whether things are going off track.

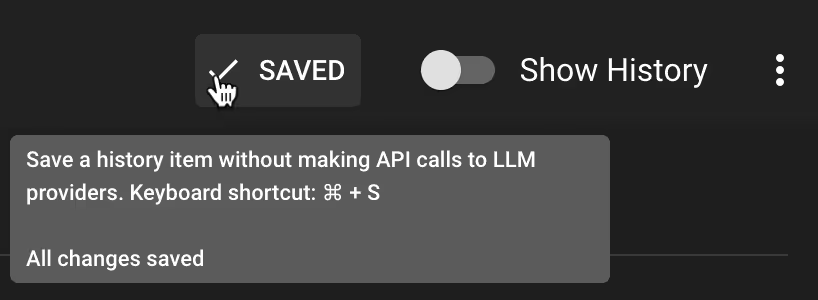

Auto-Save

We’ll now automatically save progress after 60 seconds of inactivity. The save button has moved to the top right and indicates whether there are unsaved changes.

Jinja Templating Support

Vellum prompts now support Jinja templating syntax, allowing you to dynamically construct your prompts based on the values of input variables. This is a powerful feature, and our customers (and even we!) are only just beginning to explore its full capabilities.
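To give a feel for what Jinja syntax makes possible, here is a minimal sketch that renders a template locally with the open-source `jinja2` Python library. The variable names (`company`, `tone`, `question`) and the prompt wording are made up for illustration, and this shows Jinja syntax in general, not how Vellum evaluates templates internally.

```python
from jinja2 import Template

# A prompt whose wording changes based on the value of an input variable.
# The variables (company, tone, question) are hypothetical examples.
prompt_template = Template(
    "You are a support agent for {{ company }}.\n"
    "{% if tone == 'formal' %}Use a formal tone.{% else %}Keep it friendly and casual.{% endif %}\n"
    "Customer question: {{ question }}"
)

# Fill in the input variables to produce the final prompt text.
prompt = prompt_template.render(
    company="Acme",
    tone="formal",
    question="Where is my order?",
)
print(prompt)
```

Switching `tone` to any other value selects the `else` branch, so a single template can cover several prompt variants.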

Support for the Open Source Falcon-40b LLM

Open Source LLMs are becoming more and more powerful and increasingly tempting to use. Falcon-40b is one of the best available today and is worth taking a look at if you’re trying to reduce costs.

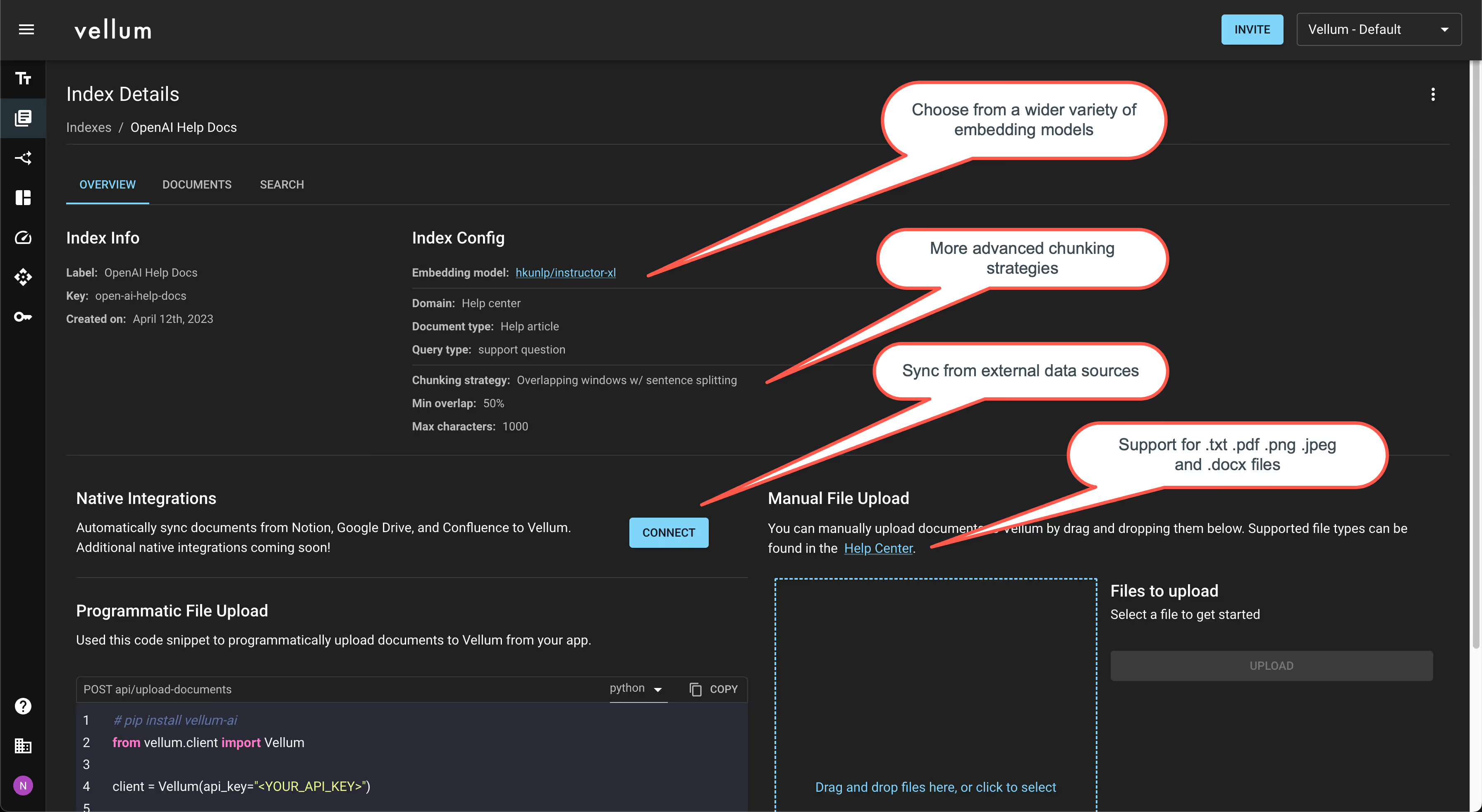

Search & Document Retrieval

We’ve made a multitude of improvements to Vellum’s document indexing and semantic search capabilities, including support for:

- Uploading more file formats: .txt, .docx, .pdf, .png, .jpeg

- Additional embedding models, including the widely used hkunlp/instructor-xl from Hugging Face.

- Connecting to external data sources like Notion, Slack, and Zendesk so that you can search across your own internal company knowledge bases.

- More sophisticated text chunking strategies

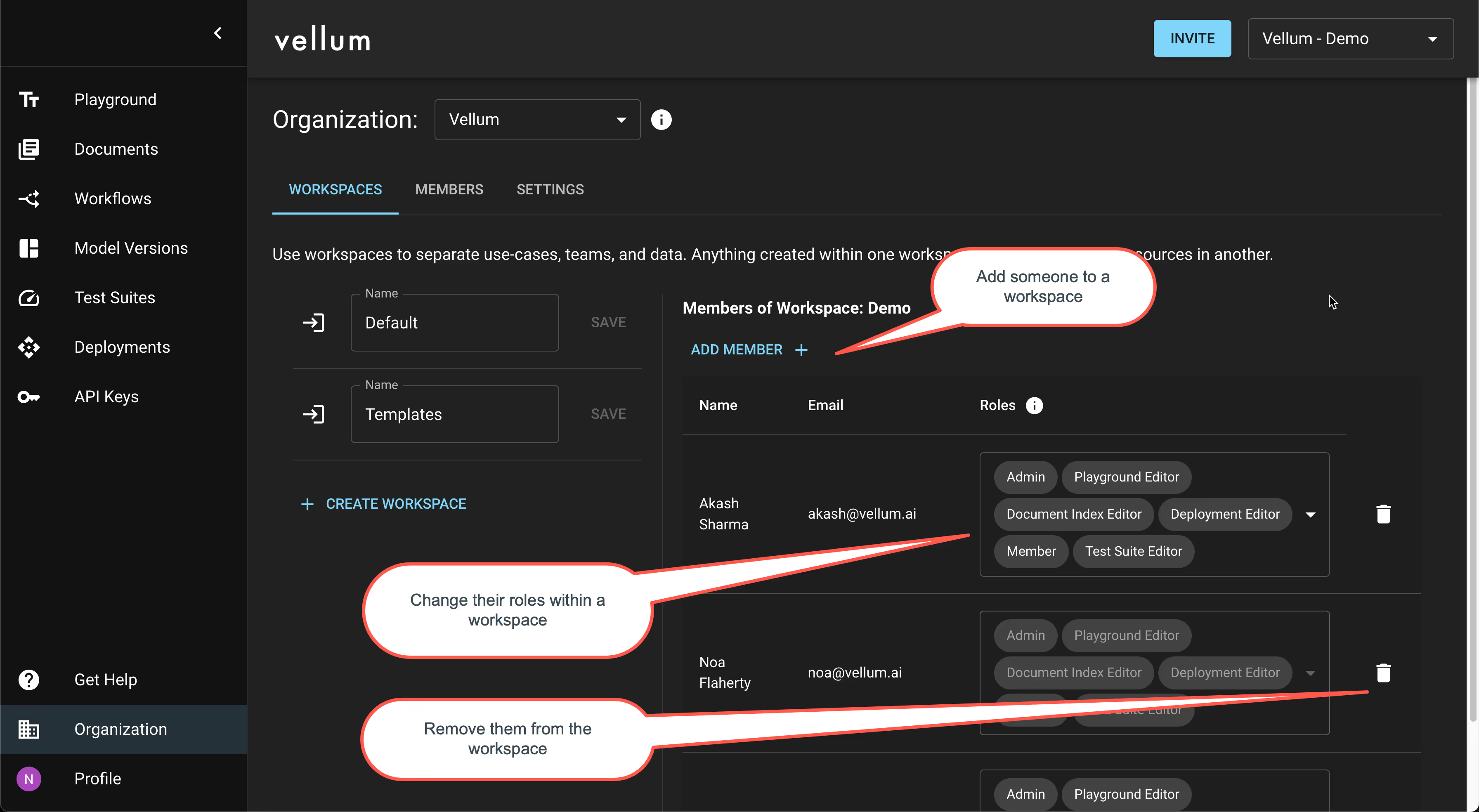

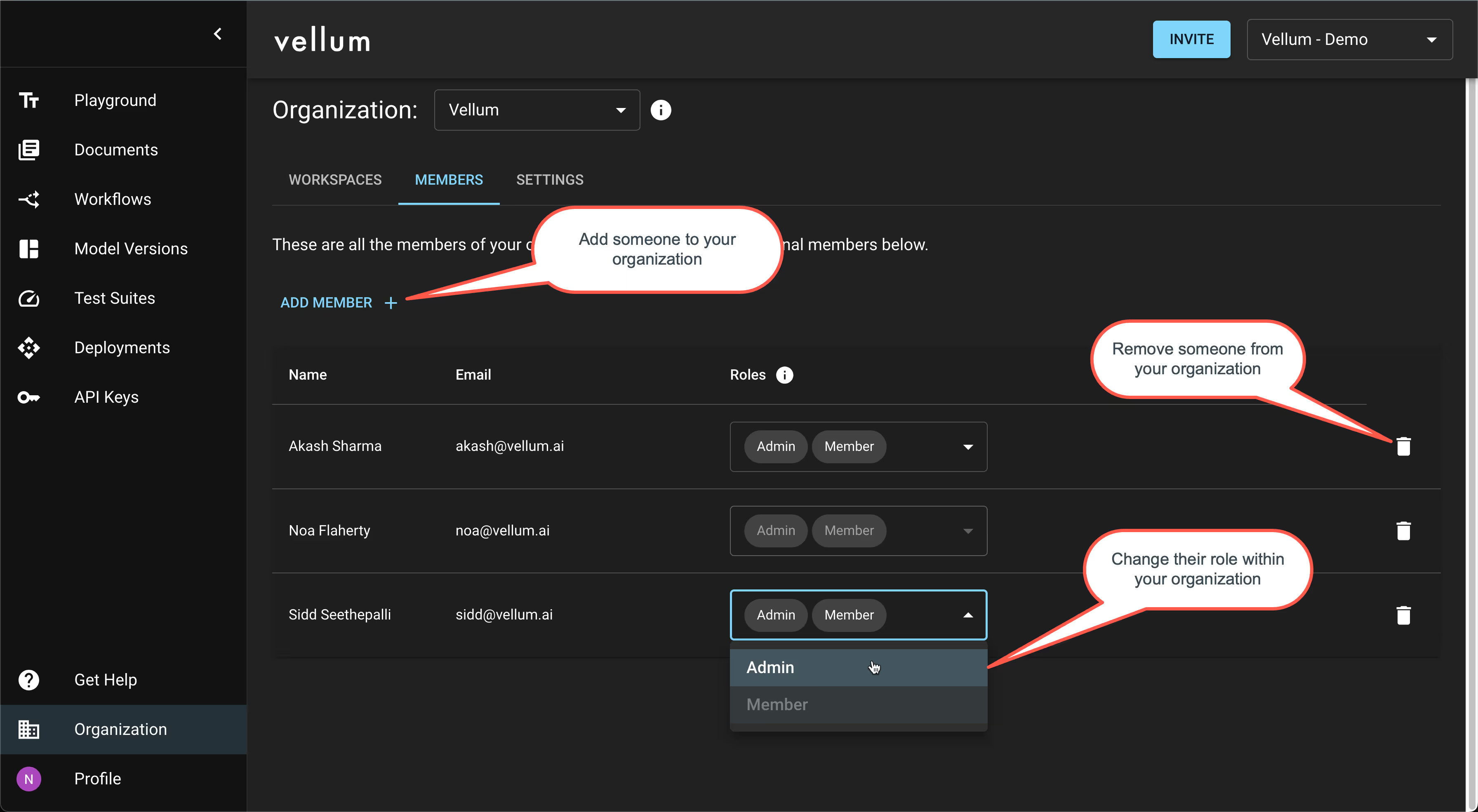

Role-Based Access Control

We now offer Role-Based Access Control to give enterprises greater control over who’s allowed to do what. You can now have non-technical team members iterate on and experiment with prompts, without allowing them to change the behavior of production systems. Once they get to a better version of a prompt, they can get help from someone who has broader permissions to deploy it.

Test Suites

We continue to invest heavily in testing and evaluating the quality of LLM output at scale.

New Webhook Evaluation Metric

You can now stand up your own API endpoint with bespoke business logic for determining whether an LLM’s output is “good” or “bad.” Vellum will send you all the data you need to make that decision, then log and display the results you send back. You can learn more here.
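As a rough sketch of what such an endpoint might look like, the example below uses only Python’s standard library. The payload and response field names (`output`, `score`), the scoring rule, and the `evaluate` and `serve` helpers are all assumptions made for illustration; the actual request and response schema is described in the webhook documentation, not here.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def evaluate(payload: dict) -> dict:
    """Hypothetical business rule: an output is "good" if it is
    non-empty and does not contain a banned phrase."""
    output = str(payload.get("output", ""))  # "output" is an assumed field name
    banned = ("as an ai language model",)
    is_good = bool(output.strip()) and not any(p in output.lower() for p in banned)
    return {"score": 1.0 if is_good else 0.0}  # "score" is an assumed field name


class EvalHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read the JSON body sent to the webhook.
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        # Apply the business logic and send the result back as JSON.
        body = json.dumps(evaluate(payload)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8000) -> None:
    """Start the endpoint locally; blocks until interrupted."""
    HTTPServer(("0.0.0.0", port), EvalHandler).serve_forever()
```

Calling `serve()` starts the endpoint on port 8000. Keeping the scoring logic in a plain function like `evaluate` makes it easy to unit test separately from the HTTP plumbing.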

Re-Running Select Test Cases

You can now re-run select test cases from within a test suite, rather than being forced to re-run them all.

API Improvements

One philosophy that we have at Vellum is that anything that is possible through the UI should be possible via the API too. We continue to invest in making Vellum as developer-friendly as possible.

New API Docs

We have a slick new site to host our API docs, courtesy of our friends at Fern. You can check out the docs at https://docs.vellum.ai/

Publicly Available APIs

We’ve exposed more APIs publicly to support programmatic interaction with Vellum, including:

- POST | https://api.vellum.ai/v1/generate-stream
  - Used to stream back the output from an LLM using HTTP streaming

- POST | https://api.vellum.ai/v1/test-suites/:id/test-cases
  - Used to upsert Test Cases within a Test Suite

- POST | https://api.vellum.ai/v1/sandboxes/:id/scenarios
  - Used to upsert a Scenario within a Sandbox

- POST | https://api.vellum.ai/v1/document-indexes
  - Used to create a new Document Index
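As a rough illustration, the endpoints above could be called from Python along these lines. This is a sketch only: the `X_API_KEY` header name, the request body fields, and the `build_request` helper are assumptions made for illustration and are not taken from this post, so check the API docs for the actual authentication scheme and request schema.

```python
import json
import urllib.request

BASE_URL = "https://api.vellum.ai/v1"
API_KEY_HEADER = "X_API_KEY"  # assumed header name; confirm in the API docs


def build_request(path: str, body: dict, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for one of the endpoints."""
    return urllib.request.Request(
        url=f"{BASE_URL}/{path.lstrip('/')}",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            API_KEY_HEADER: api_key,
        },
        method="POST",
    )


# Hypothetical body: the "name" field is a placeholder, not the documented schema.
req = build_request("document-indexes", {"name": "my-index"}, api_key="YOUR_API_KEY")
print(req.get_method(), req.full_url)
```

Passing the request to `urllib.request.urlopen(req)` would actually send it. For the streaming endpoint, the response object can be iterated line by line as data arrives.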

Community & Support

We’ve launched a new Discord server so that members of the Vellum community can interact, share tips, request new features, and seek guidance. Come join us!

In Summary

We’ve been hard at work helping businesses adopt AI and bring AI-powered features into production. Looking ahead, we’re excited to tackle the problem of experimenting with, and simulating, chained LLM calls. More on this soon 😉

We welcome you to sign up for Vellum to give it a try and subscribe to our newsletter to keep up with the latest!

As always, thank you to all our customers who have pushed us to build a great product. Your feedback means the world to us!
