What Our Customers Say About Vellum

Loved by developers and product teams, Vellum is the trusted partner to help you build any LLM-powered application.

Backed by top VCs including Y Combinator, Rebel Fund, Eastlink Capital, and the founders of HubSpot, Reddit, Dropbox, Cruise, and Instacart

Leverage Vellum to evaluate prompts and models, integrate them with agents using RAG and APIs, then deploy and continuously improve in production.

Empower both technical and non-technical teams to experiment with new prompts and models without impacting production.

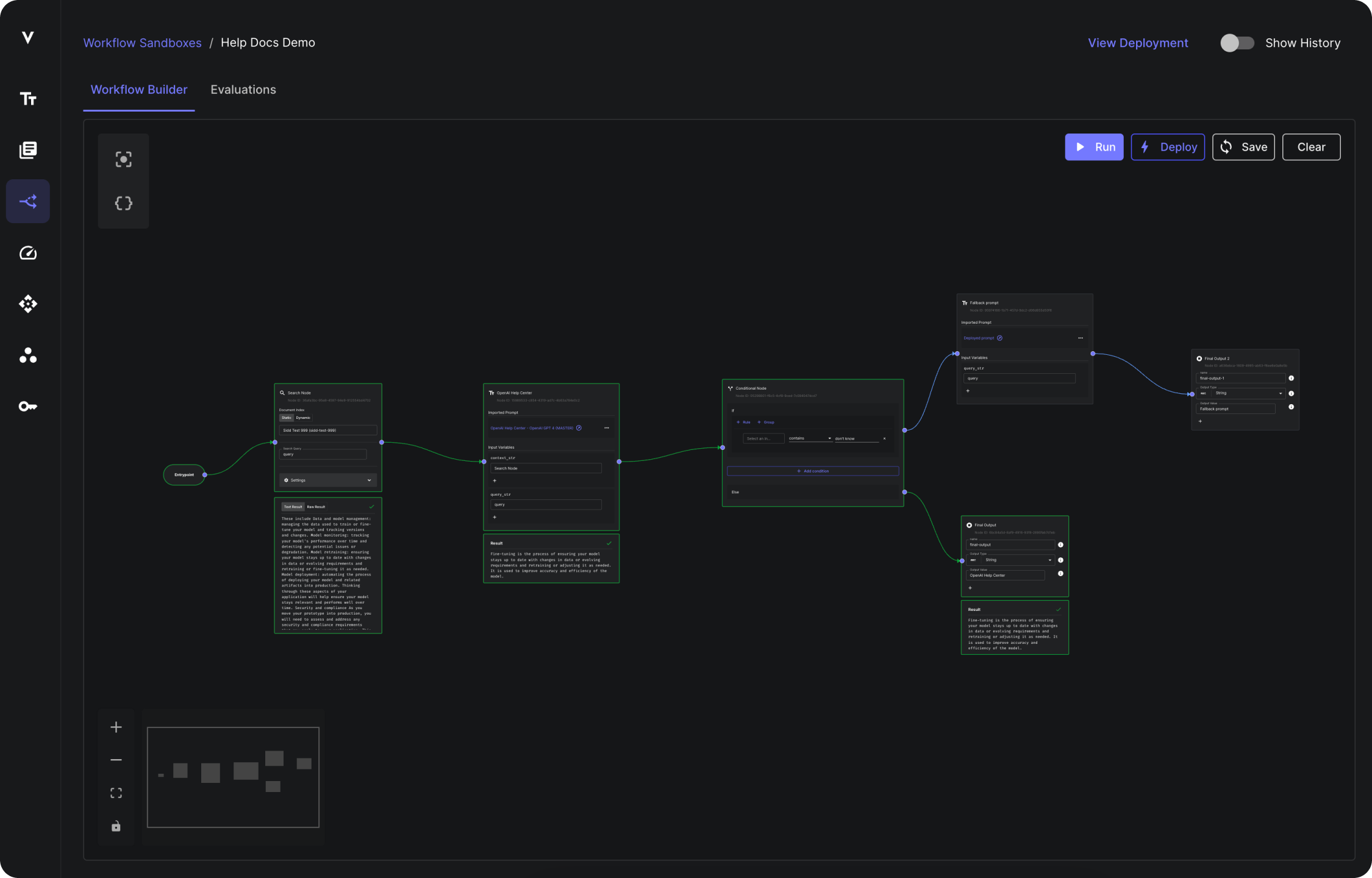

Rapidly prototype, test, and deploy complex chains of prompts and the biz logic between them with powerful versioning, debugging, and monitoring tools.

One endpoint to upload text, one endpoint to search across text. None of the infra, but lots of best practices. Get started with best-in-class RAG in minutes with none of the eng/infra overhead.

Progress past the “vibe check” and add some engineering rigor with quantitative evaluation. Use popular eval metrics or define your own.
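As a rough illustration of what quantitative evaluation can look like, here is a minimal Python sketch that scores model outputs against expected answers with one common metric (exact match) and one custom metric. It does not use Vellum's API; the function names, the metric definitions, and the sample data are all assumptions made for the example.

```python
from typing import Callable, Dict, List, Tuple

# A metric takes (model_output, expected_answer) and returns a score in [0, 1].
Metric = Callable[[str, str], float]


def exact_match(output: str, expected: str) -> float:
    """Common metric: 1.0 if the texts match after trimming and lowercasing."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def contains_expected(output: str, expected: str) -> float:
    """Hypothetical custom metric: 1.0 if the expected answer appears anywhere in the output."""
    return 1.0 if expected.strip().lower() in output.lower() else 0.0


def evaluate(cases: List[Tuple[str, str]], metrics: Dict[str, Metric]) -> Dict[str, float]:
    """Return the mean score of each metric over (output, expected) pairs."""
    if not cases:
        raise ValueError("the eval set must contain at least one case")
    return {
        name: sum(metric(output, expected) for output, expected in cases) / len(cases)
        for name, metric in metrics.items()
    }


# Illustrative eval set: (model output, expected answer). The data is made up.
eval_set = [
    ("Paris", "paris"),
    ("The capital is Paris.", "Paris"),
    ("Lyon", "Paris"),
]

scores = evaluate(eval_set, {"exact_match": exact_match, "contains": contains_expected})
print(scores)
```

Tracking numbers like these across prompt or model changes is what replaces the "vibe check": a change that lowers a score on the eval set is visible before it ships.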

GitHub-style release management for your prompts & prompt chains. Datadog-style monitoring and observability. A tight feedback loop across it all – catch edge cases in prod and add them to your eval set.

Best-in-class security, privacy, and scalability.

Whether you use Vellum or not, building a production-grade AI application requires investment in four key areas.

Your data is your moat. Feed your prompts data unique to your company and customers to create personalized experiences.

Rapid iteration is crucial. Quickly iterate on prompts, compare different models side-by-side, test out new prompt chains, and evaluate your outputs at scale.

GPT-4, meet software development best practices. GenAI development still requires good ol’ fashioned unit testing, version control, release management, and monitoring.

Tighten those feedback loops. Catch edge cases in production, add them to your eval set, and iterate until they pass. Replay old requests against new models to gain confidence before shipping.

Creating world-class AI experiences requires extensive prompt testing, fast deployment, and detailed production monitoring. Luckily, Vellum provides all three in a slick package. The Vellum team is also lightning fast to add features. I asked for 3 features and they shipped all three within 24 hours!

I love the ability to compare OpenAI and Anthropic next to open source models like Dolly. Open source models keep getting better, and I’m excited to use the platform to find the right model for the job.

We’ve migrated our prompt creation and editing workflows to Vellum. The platform makes it easy for multiple people at Encore (including non-technical people) to collaborate on prompts and makes sure we can reliably update production traffic.

Having a really good time using Vellum - makes it easy to deploy and look for errors. After identifying the error, it was also easy to “patch” it in the UI by updating the prompt to return data differently. Back-testing on previously submitted prompts helped confirm nothing else broke.

Vellum gives me the peace of mind that I can always debug my production LLM traffic if needed. The UI is clean to observe any abnormalities and making changes without breaking existing behavior is a breeze!

Our engineering team just started using Vellum and we’re already seeing the productivity gains! The ability to compare model providers side by side was a game-changer in building one of our first AI features

We’ve worked closely with the Vellum team and built a complex AI implementation tailored to our use case. The test suites and chat mode functionality in Vellum's Prompt Engineering environment were particularly helpful in finalizing our prompts. The team really cares about providing a successful outcome to us.

Vellum’s platform allows multiple disciplines within our company to collaborate on AI workflows, letting us move more quickly from prototyping to production
